SelfRemaster: Self-Supervised Speech Restoration with Analysis-by-Synthesis Approach Using Channel Modeling

Paper

arXiv preprint

Author

Takaaki Saeki,

Shinnosuke Takamichi,

Tomohiko Nakamura,

Naoko Tanji,

Hiroshi Saruwatari

(The University of Tokyo, Japan)

Abstract

We present a self-supervised speech restoration method without paired speech corpora. Because the previous general speech restoration method uses artificial paired data created by applying various distortions to high-quality speech corpora, it cannot sufficiently represent acoustic distortions of real data, limiting the applicability. Our model consists of analysis, synthesis, and channel modules that simulate the recording process of degraded speech and is trained with real degraded speech data in a self-supervised manner. The analysis module extracts distortionless speech features and distortion features from degraded speech, while the synthesis module synthesizes the restored speech waveform, and the channel module adds distortions to the speech waveform. Our model also enables audio effect transfer, in which only acoustic distortions are extracted from degraded speech and added to arbitrary high-quality audio. Experimental evaluations with both simulated and real data show that our method achieves significantly higher-quality speech restoration than the previous supervised method, suggesting its applicability to real degraded speech materials.

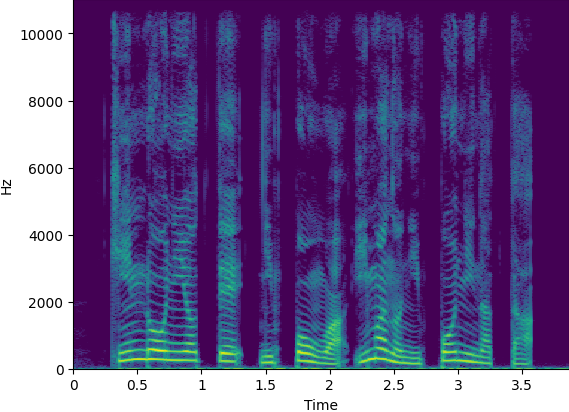

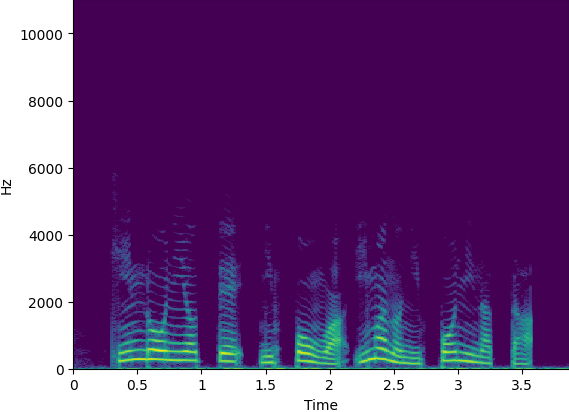

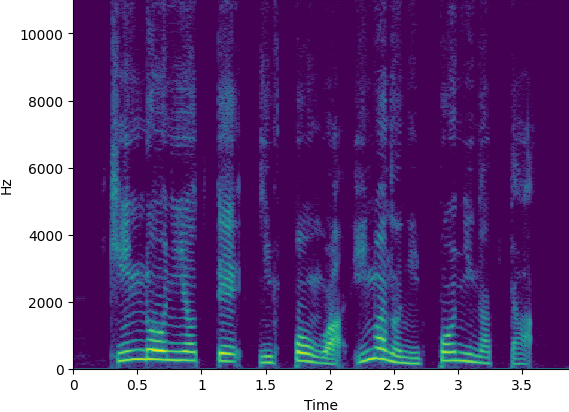

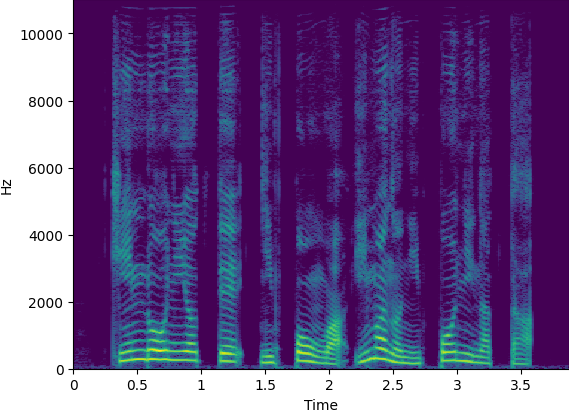

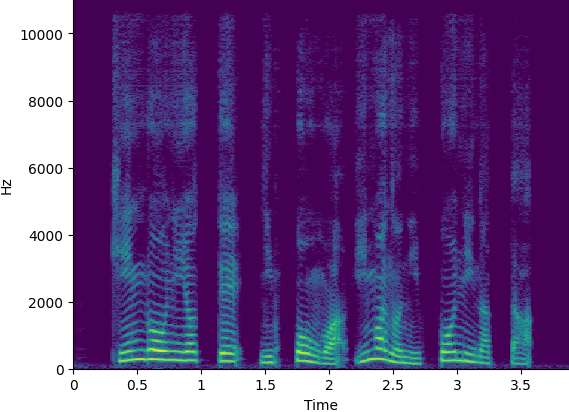

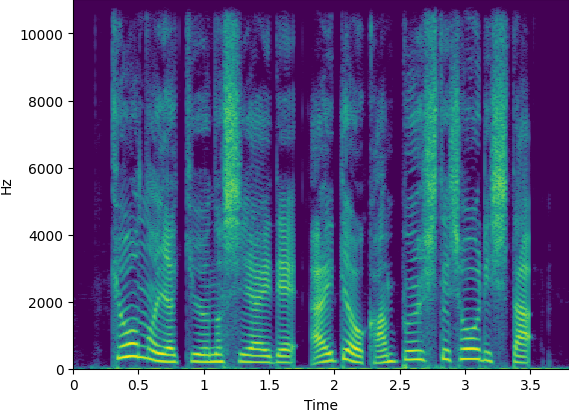

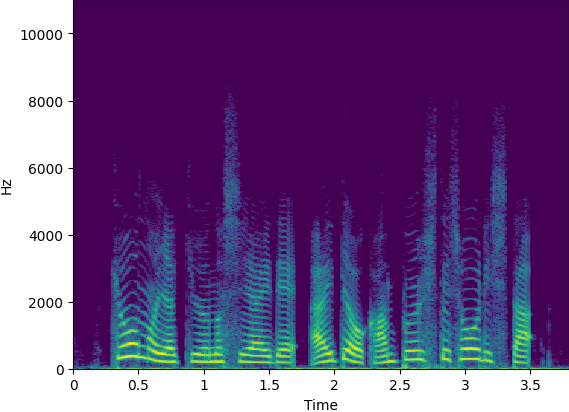

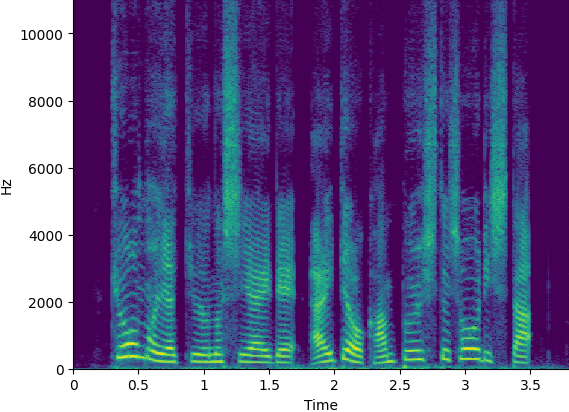

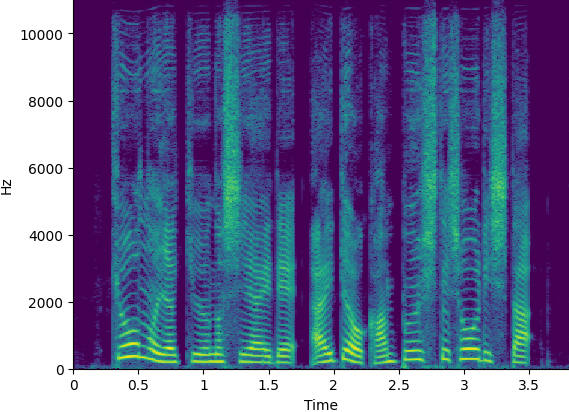

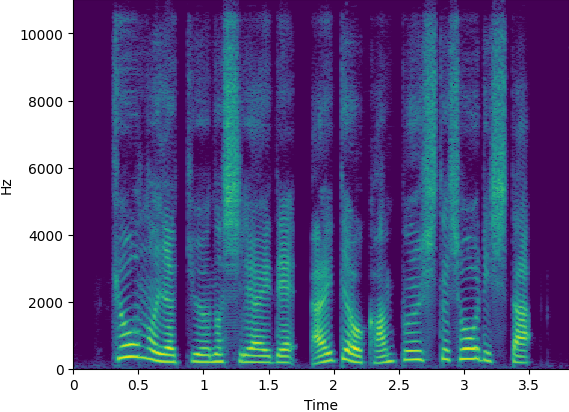

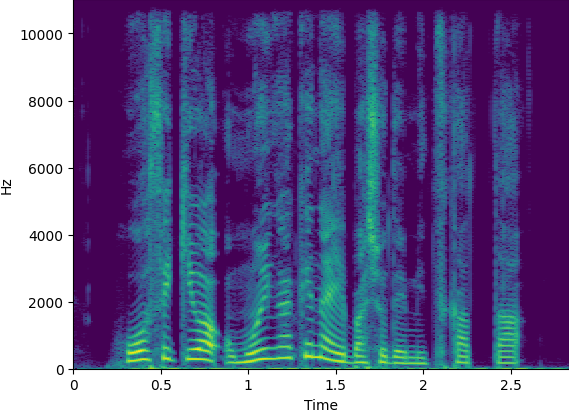

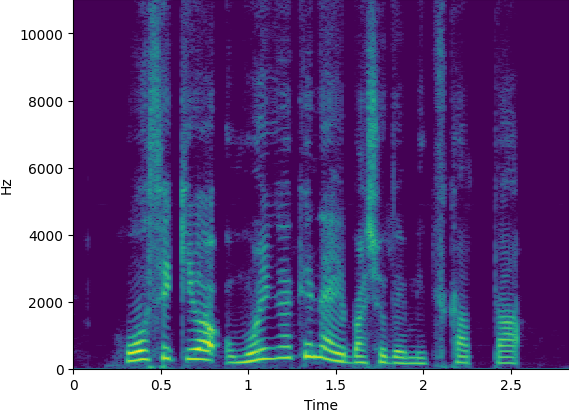

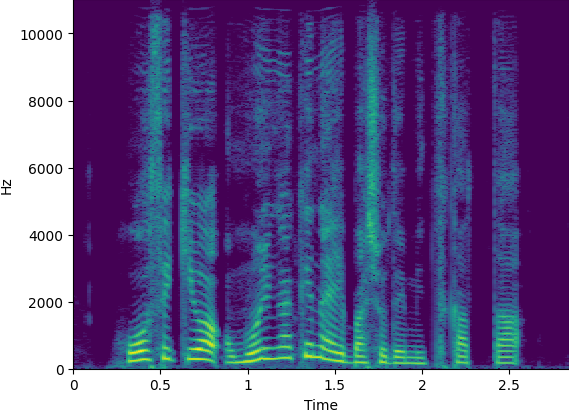

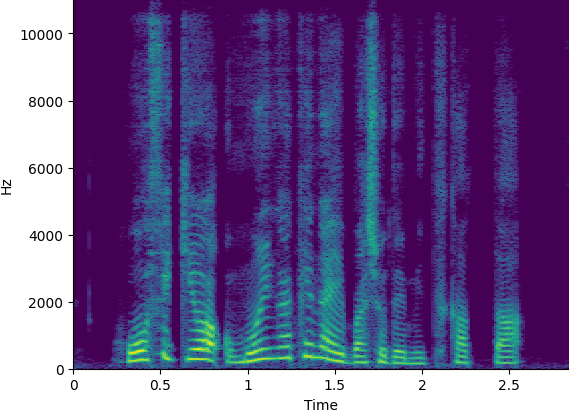

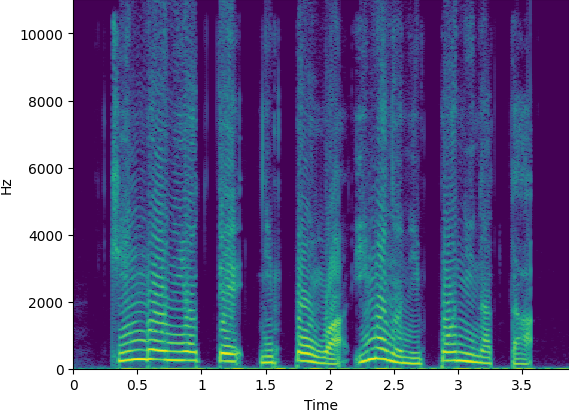

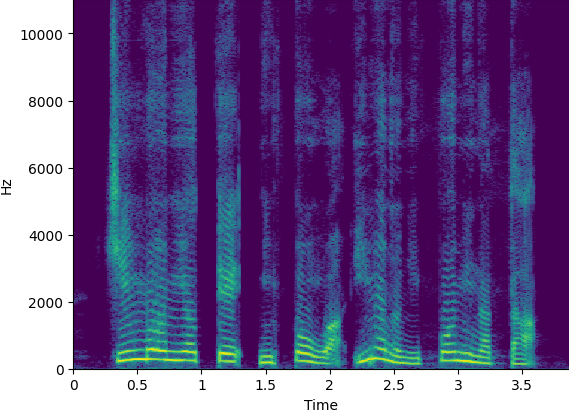

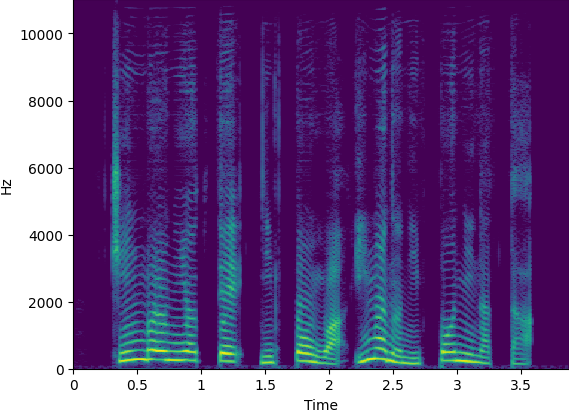

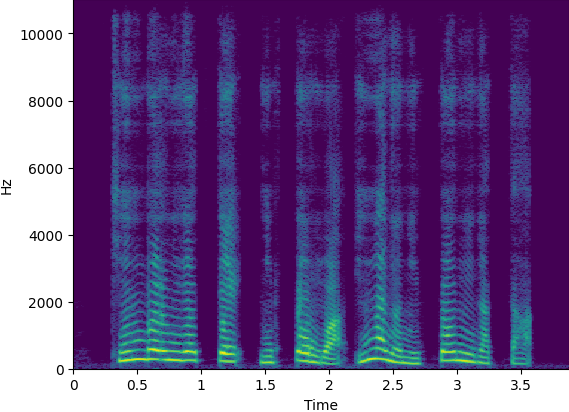

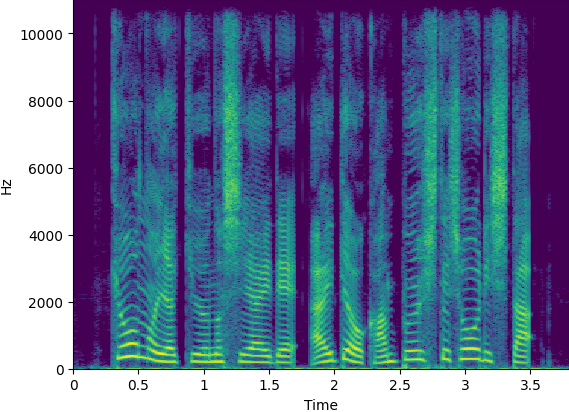

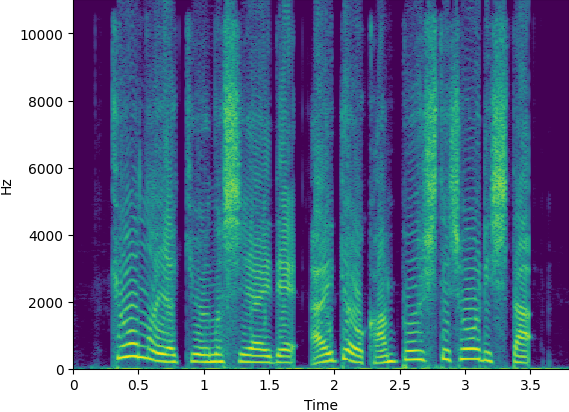

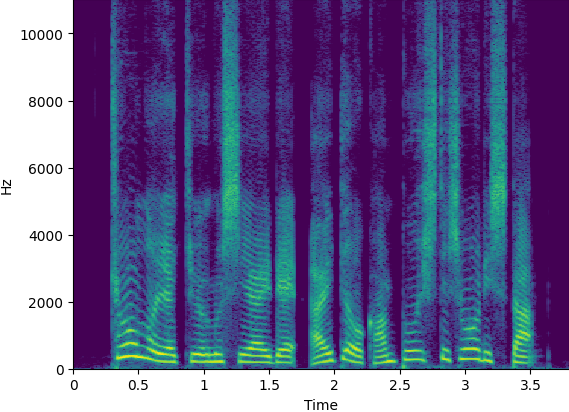

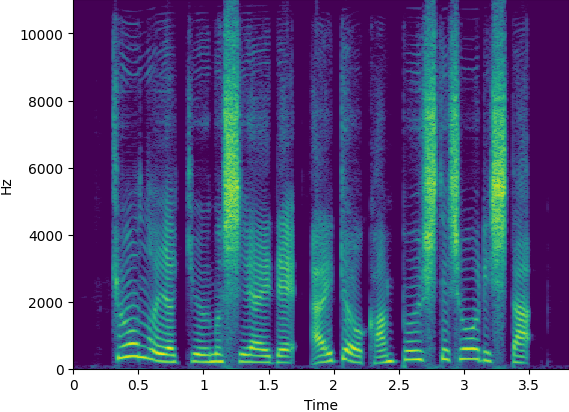

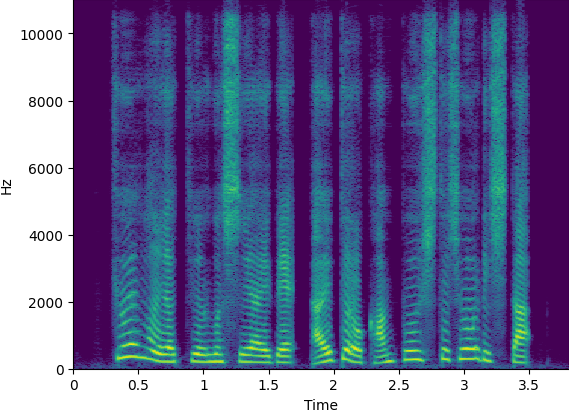

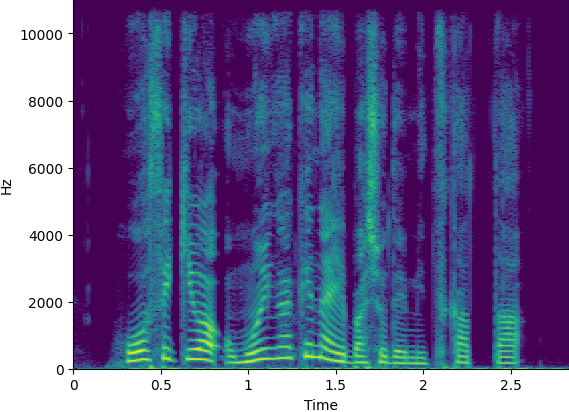

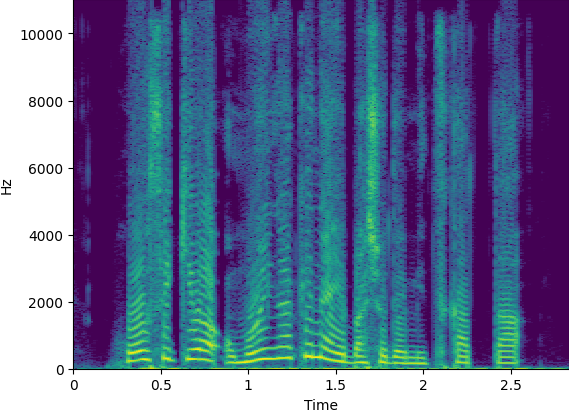

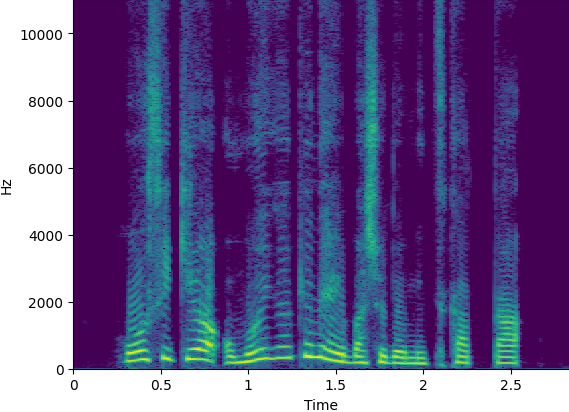

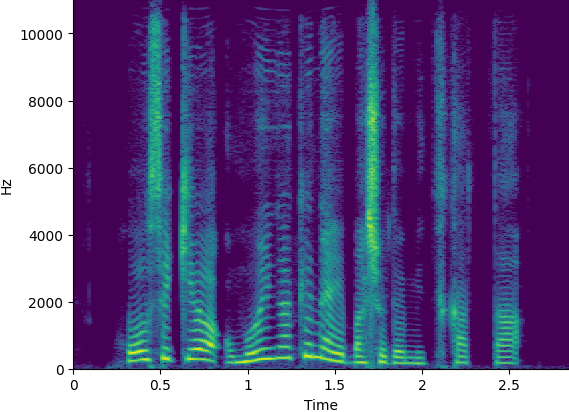

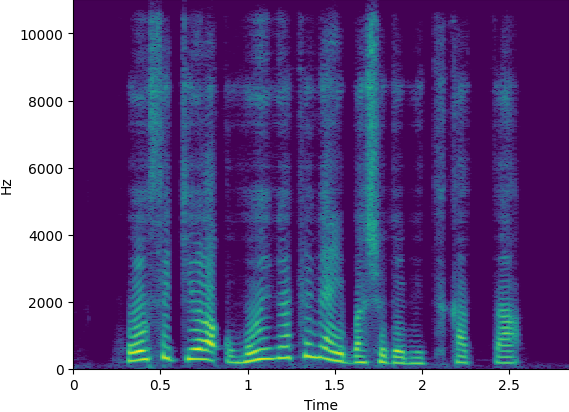

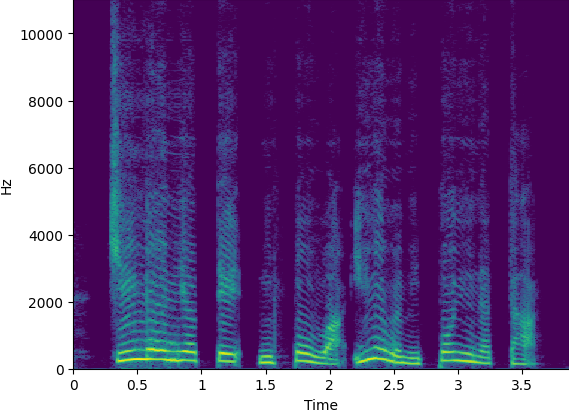

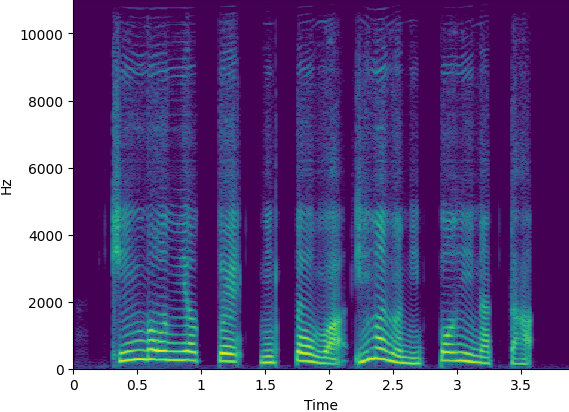

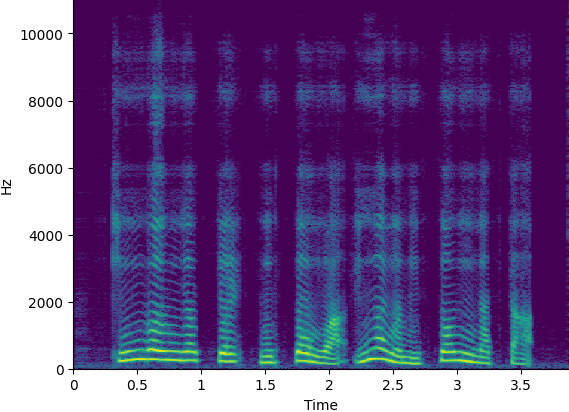

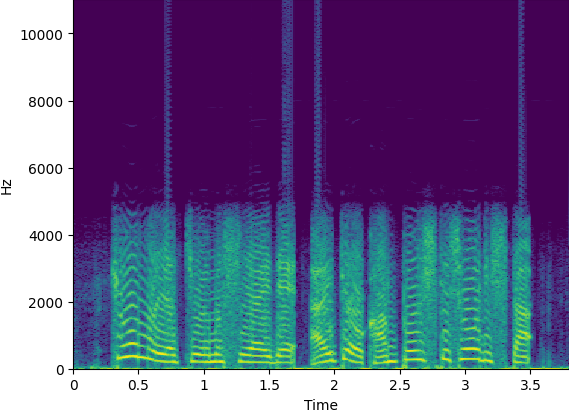

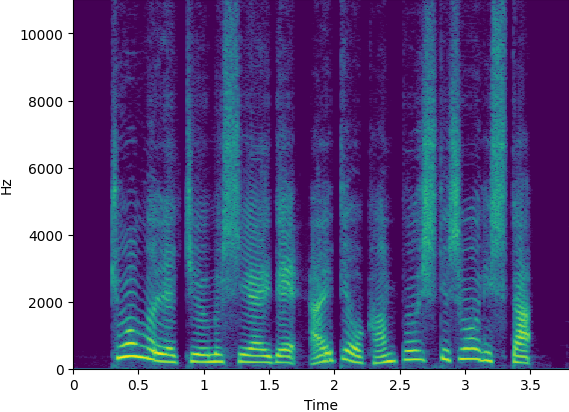

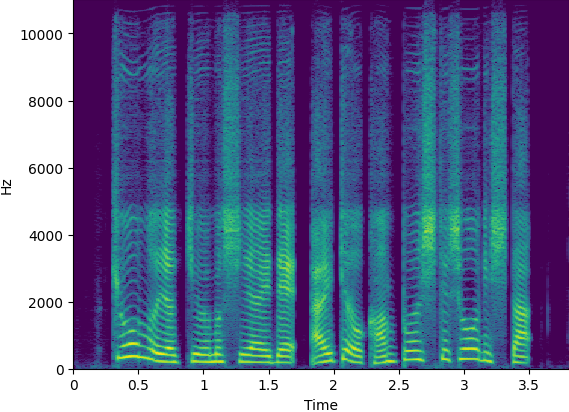

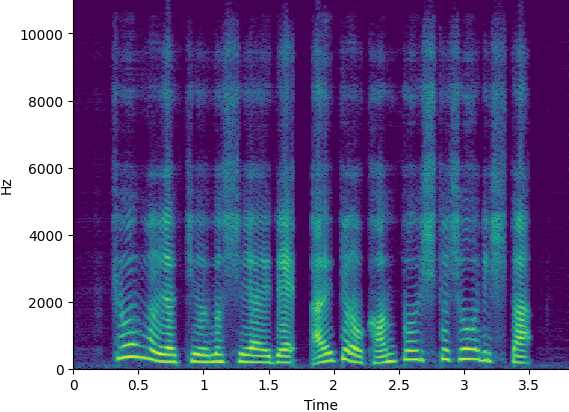

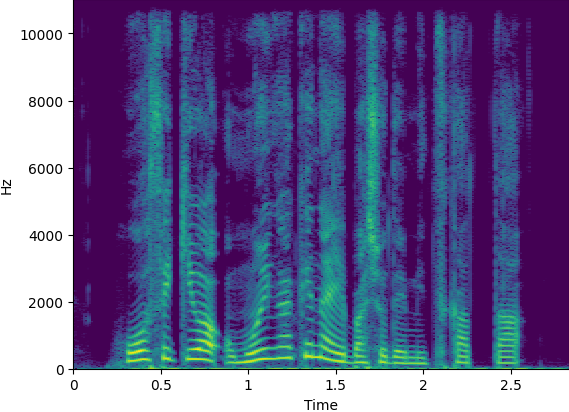

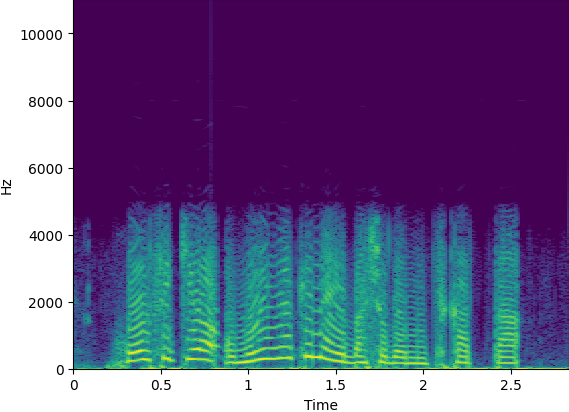

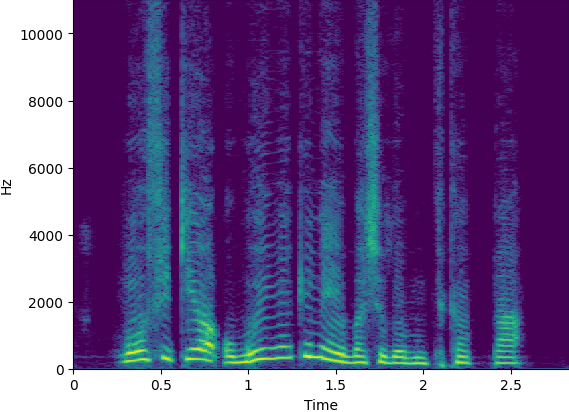

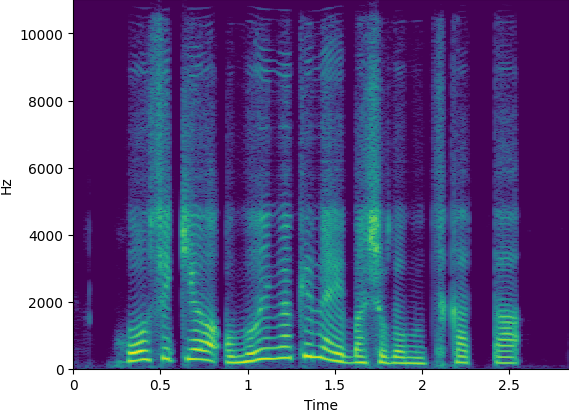

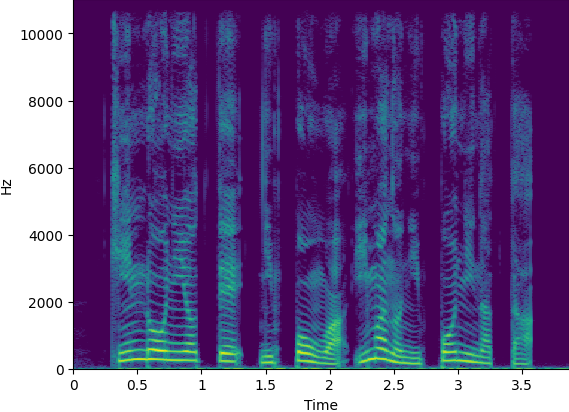

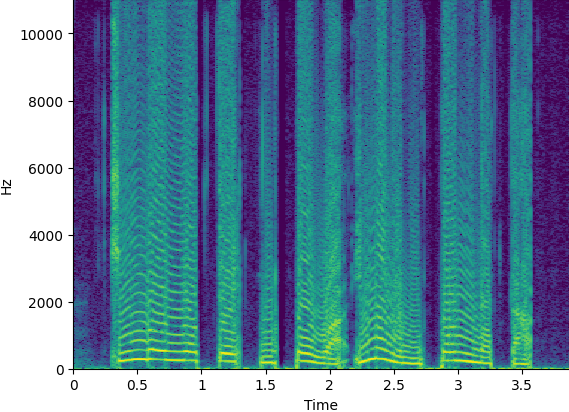

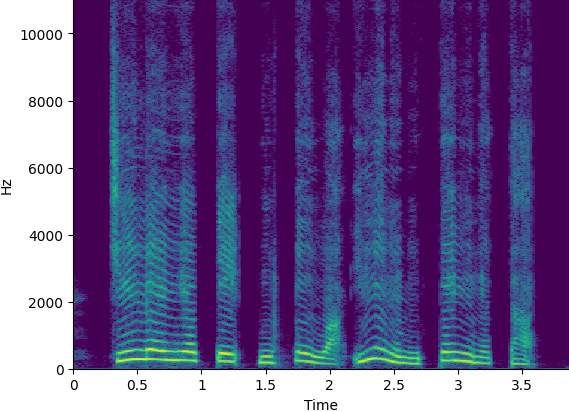

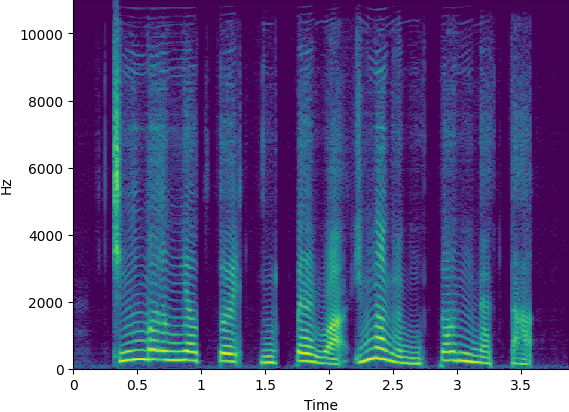

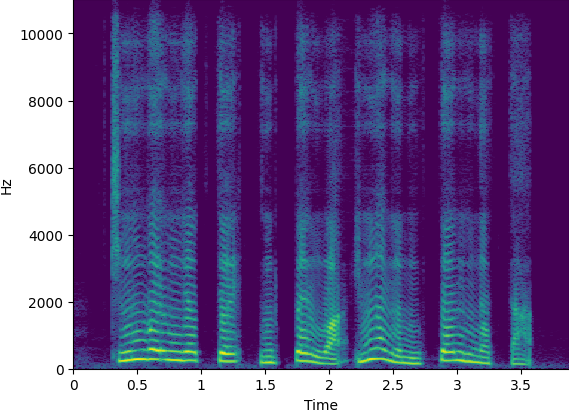

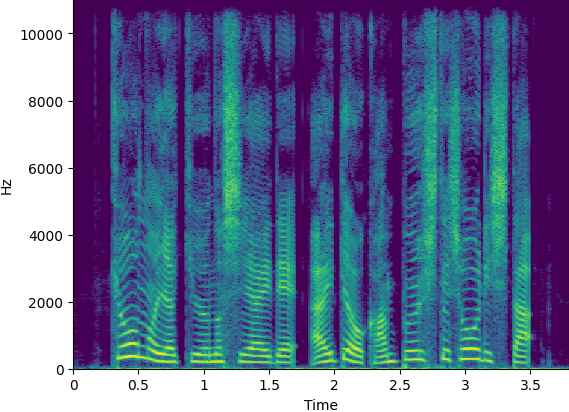

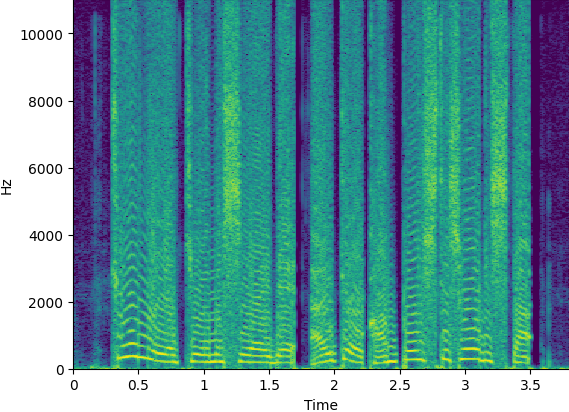

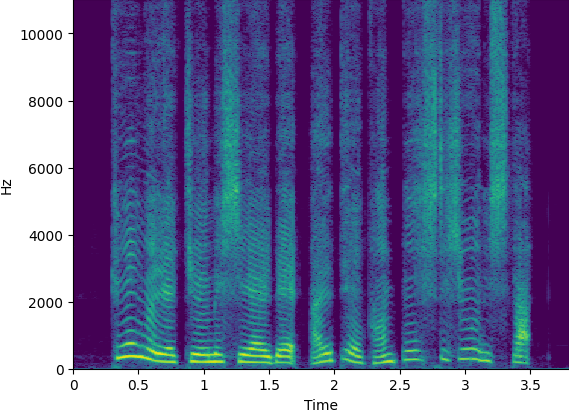

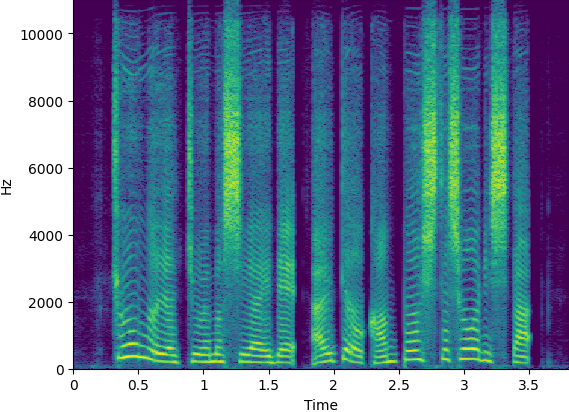

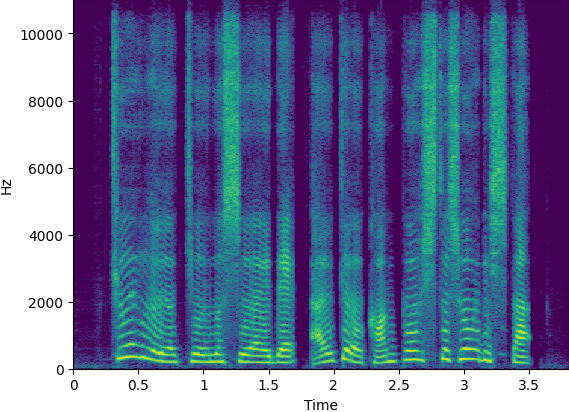

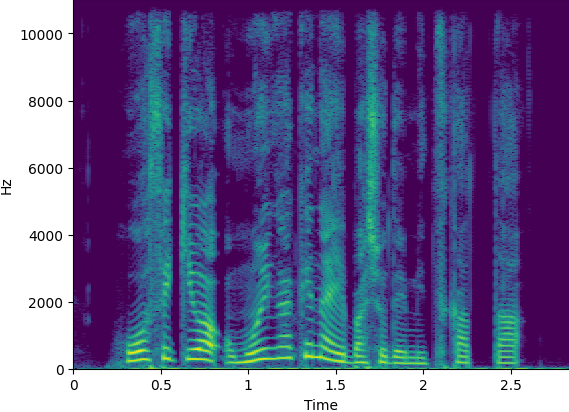

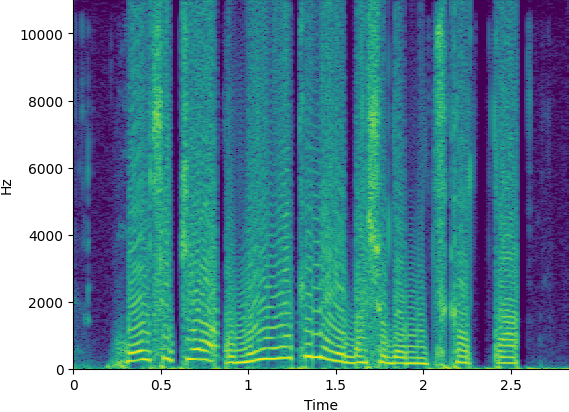

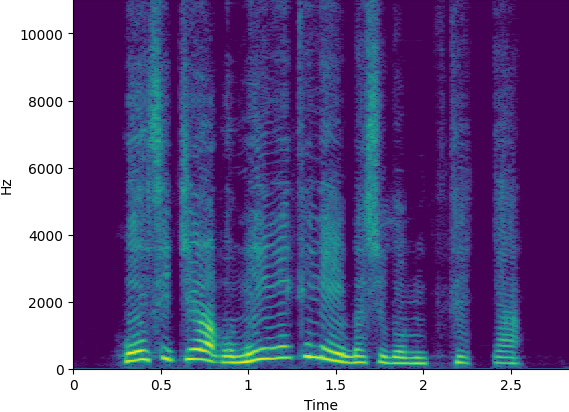

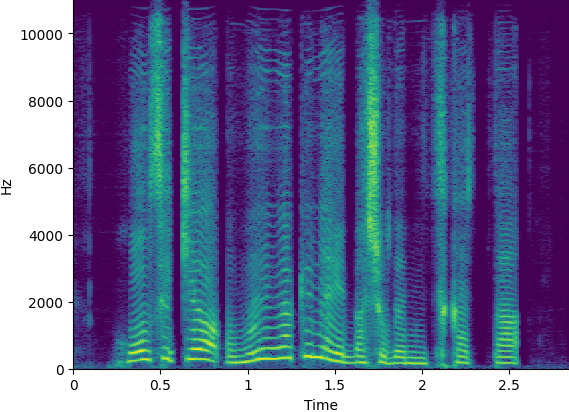

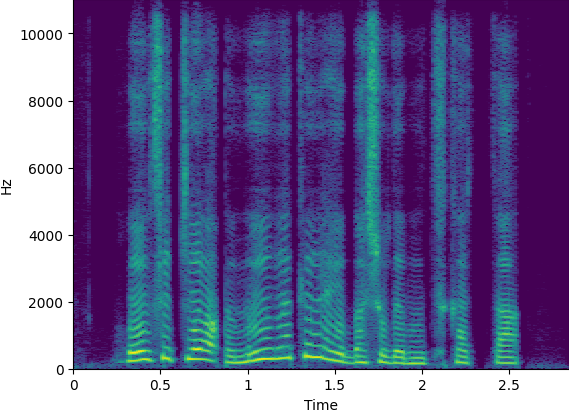

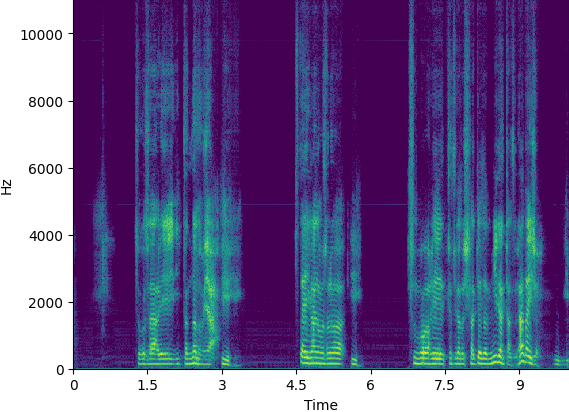

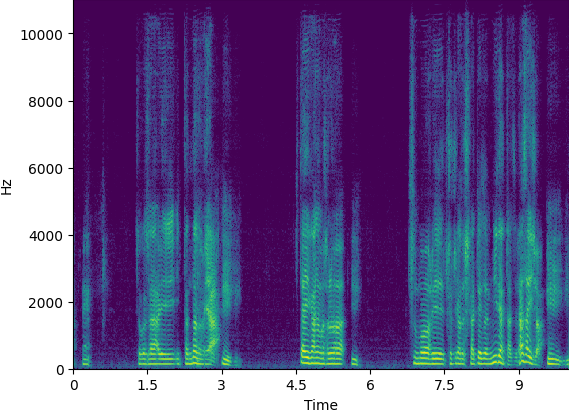

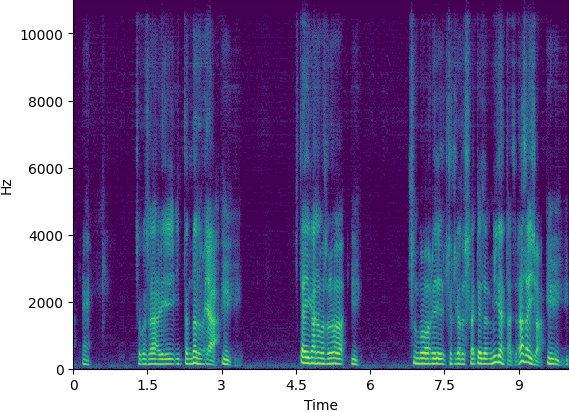

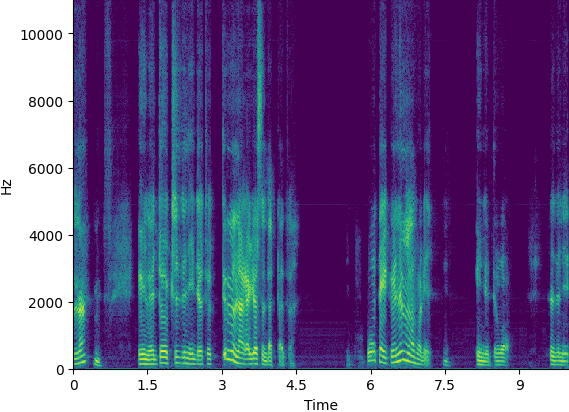

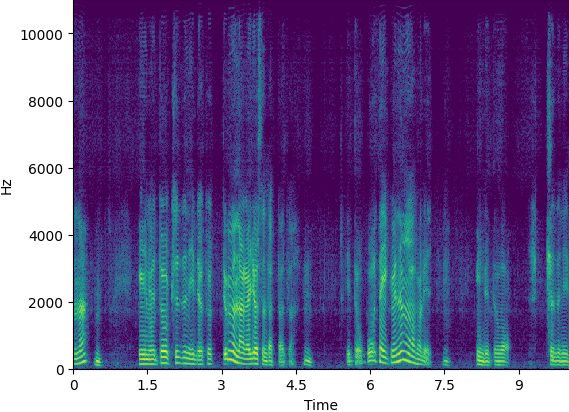

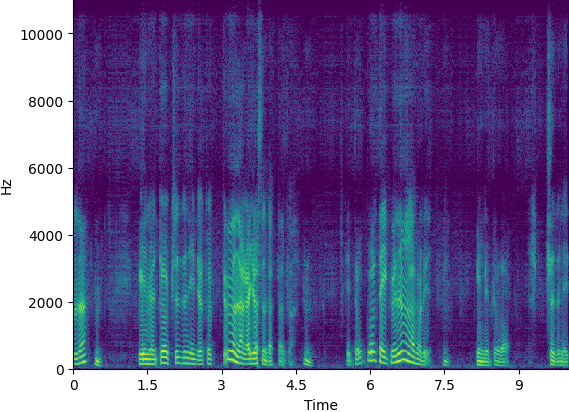

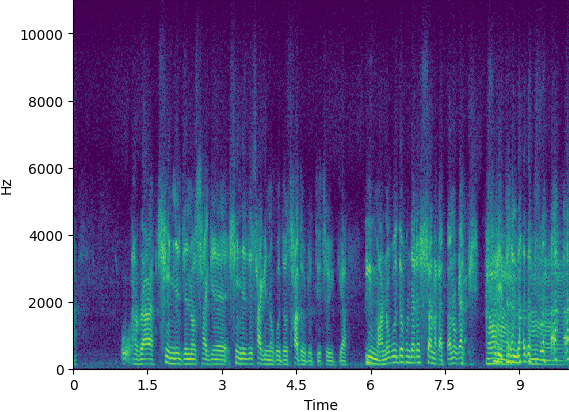

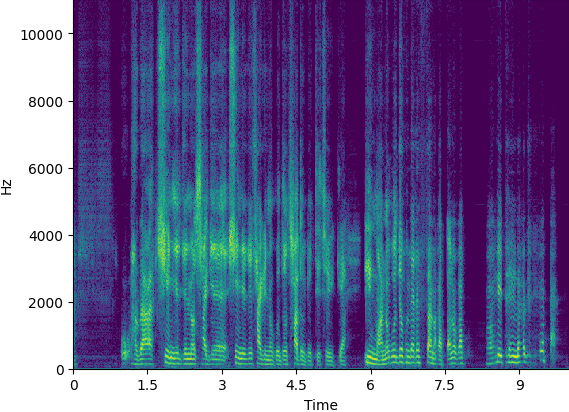

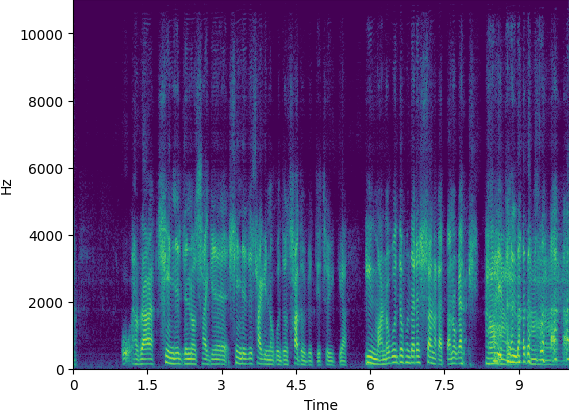

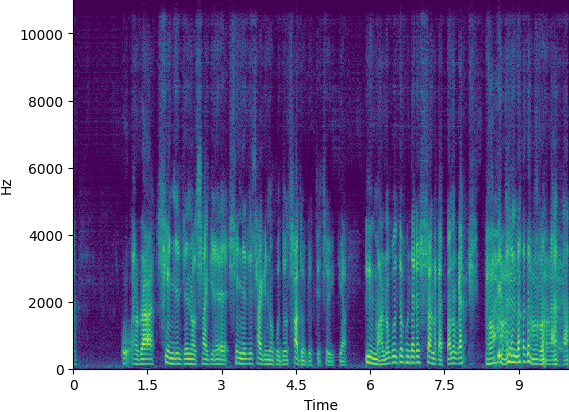

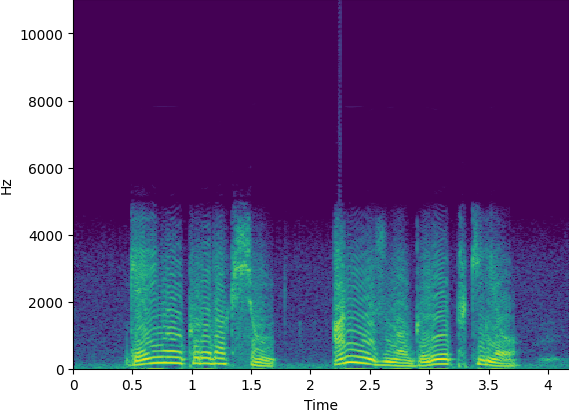

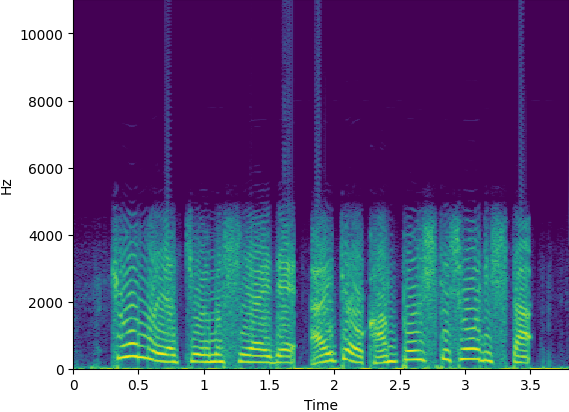

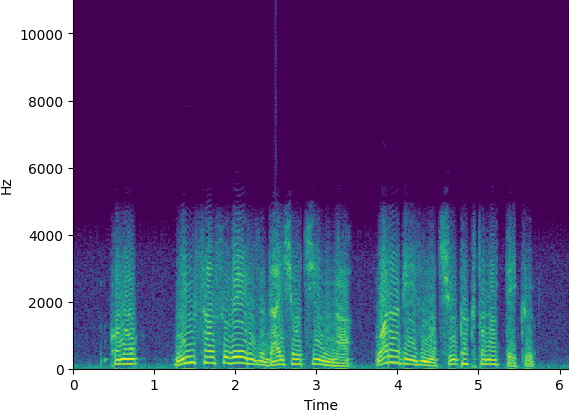

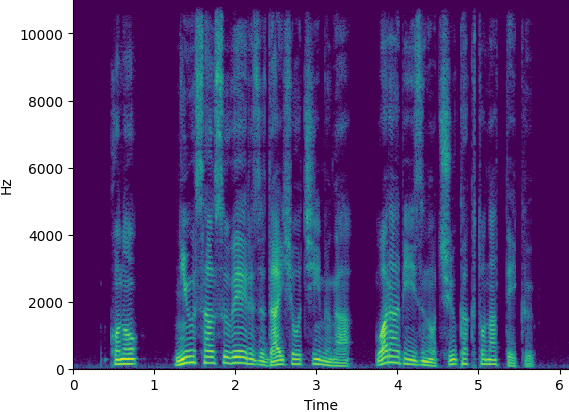

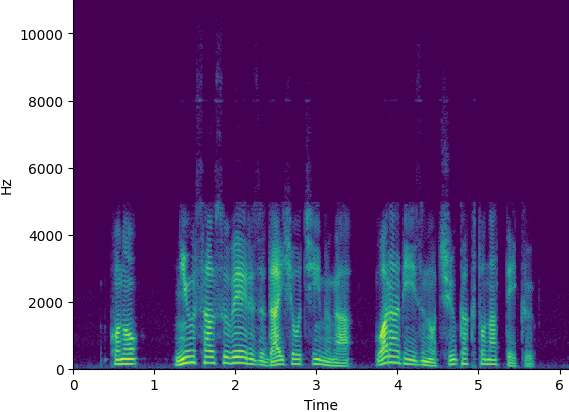

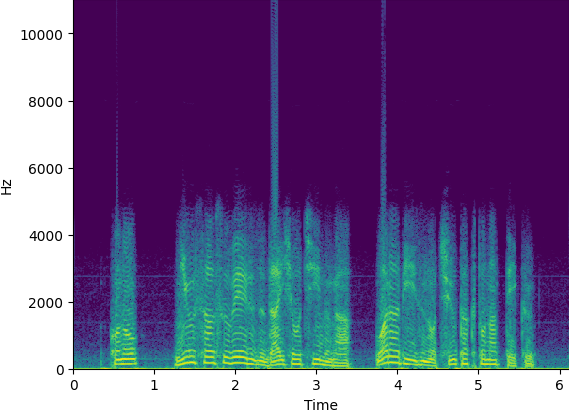

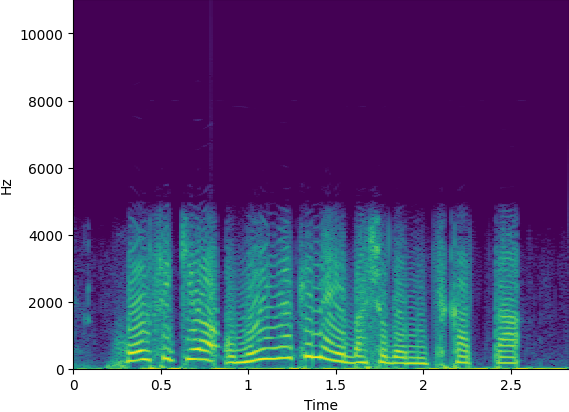

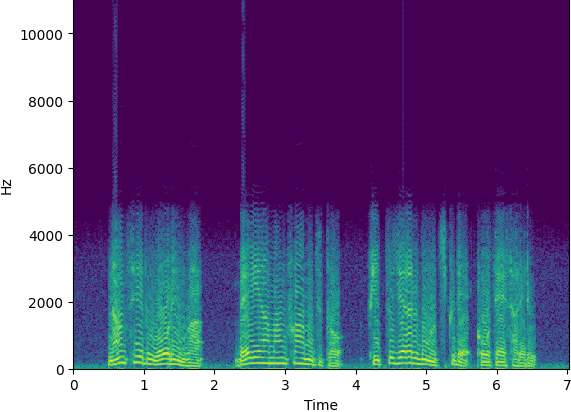

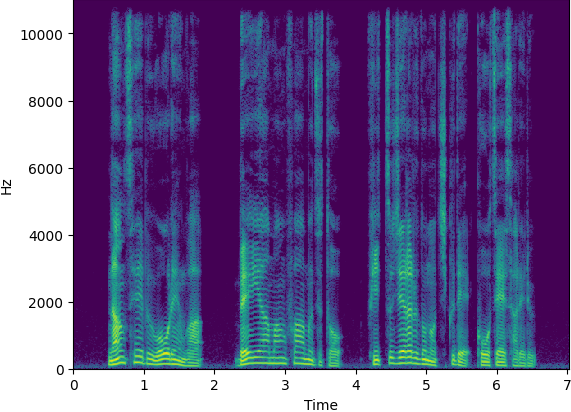

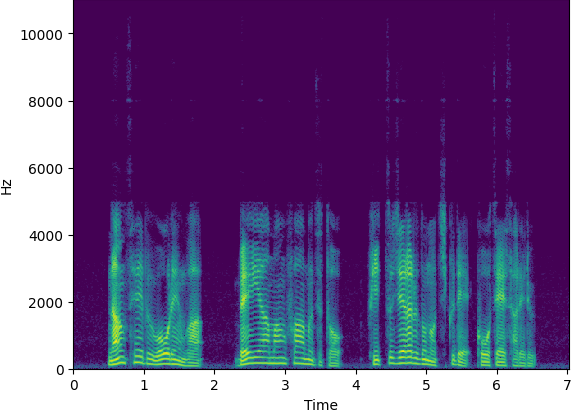

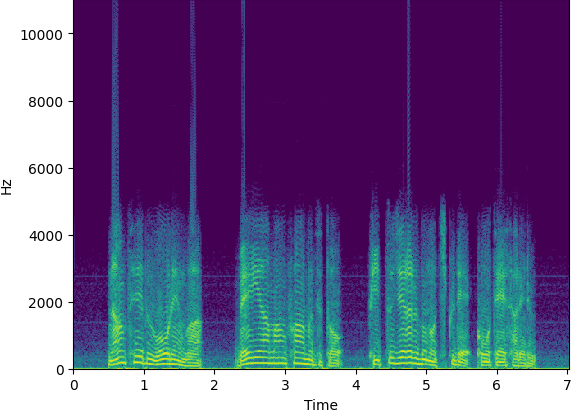

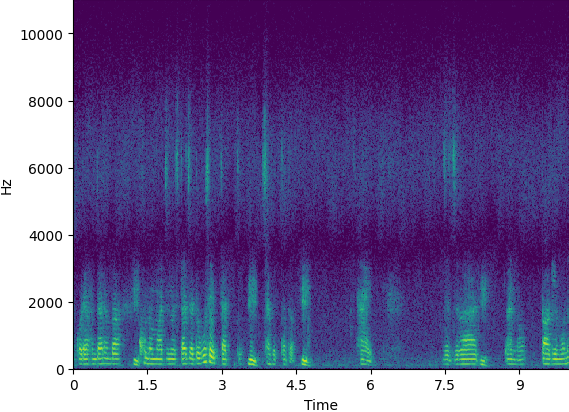

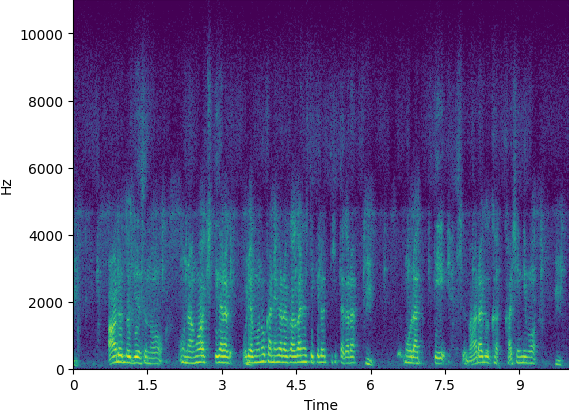

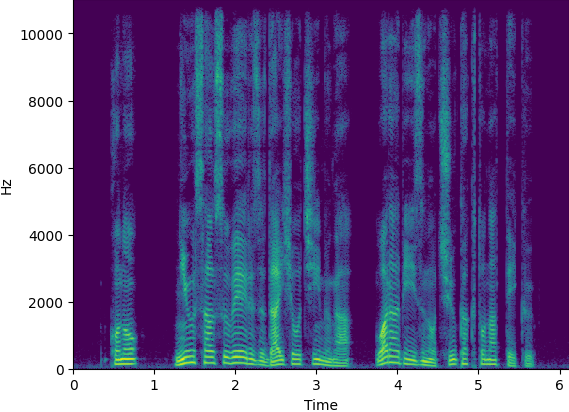

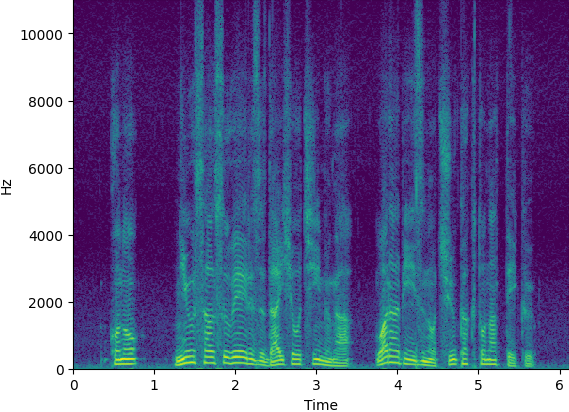

1. Speech restoration demo with simulated data

(1.a) Band-limited| Utterance | Groundtruth | Input | Liu, et al. [1] | SSL-dual (MelSpec) | SSL-dual (SourceFilter) |

|---|---|---|---|---|---|

| Sample1 |  |

|

|

|

|

| Sample2 |  |

|

|

|

|

| Sample3 |  |

|

|

|

|

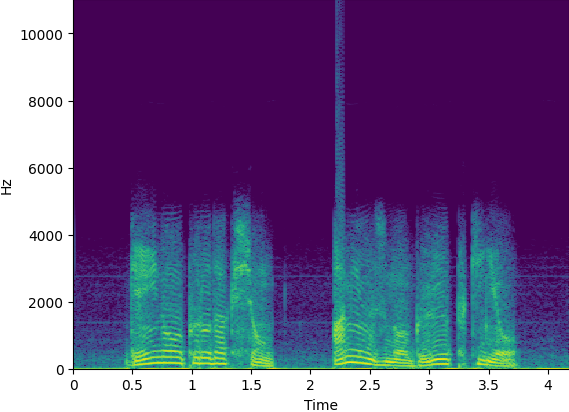

(1.b) Clipped

| Utterance | Groundtruth | Input | Liu, et al. [1] | SSL-dual (MelSpec) | SSL-dual (SourceFilter) |

|---|---|---|---|---|---|

| Sample1 |  |

|

|

|

|

| Sample2 |  |

|

|

|

|

| Sample3 |  |

|

|

|

|

(1.c) Quantized & Resampled

| Utterance | Groundtruth | Input | Liu, et al. [1] | SSL-dual (MelSpec) | SSL-dual (SourceFilter) |

|---|---|---|---|---|---|

| Sample1 |  |

|

|

|

|

| Sample2 |  |

|

|

|

|

| Sample3 |  |

|

|

|

|

(1.d) Overdrive

| Utterance | Groundtruth | Input | Liu, et al. [1] | SSL-dual (MelSpec) | SSL-dual (SourceFilter) |

|---|---|---|---|---|---|

| Sample1 |  |

|

|

|

|

| Sample2 |  |

|

|

|

|

| Sample3 |  |

|

|

|

|

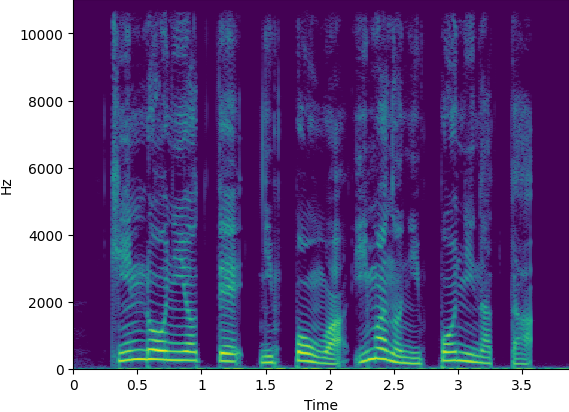

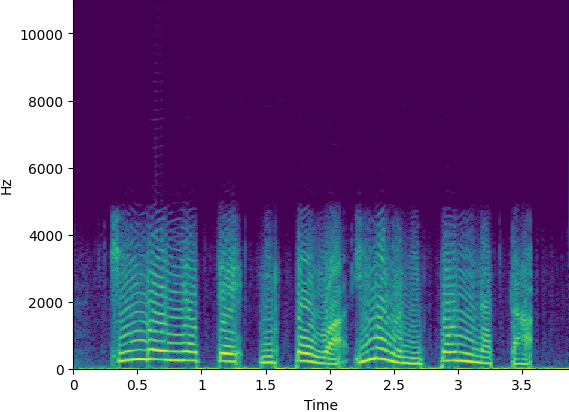

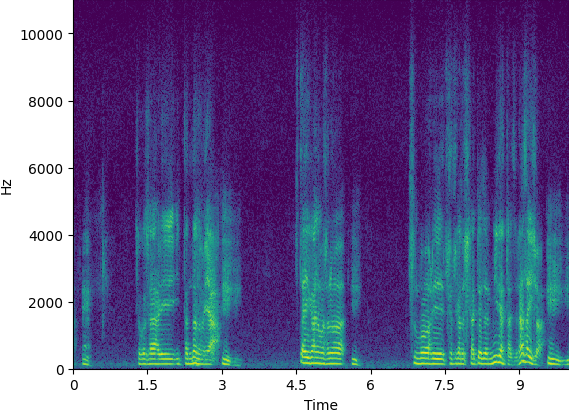

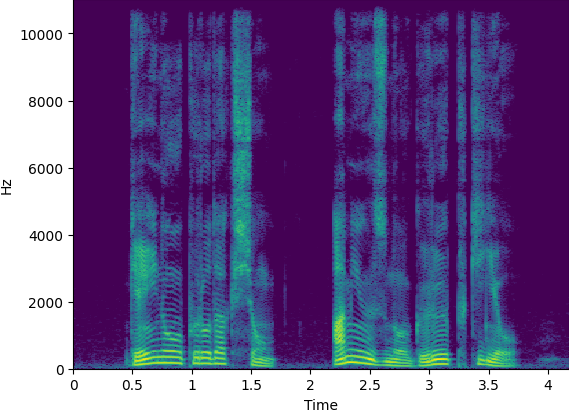

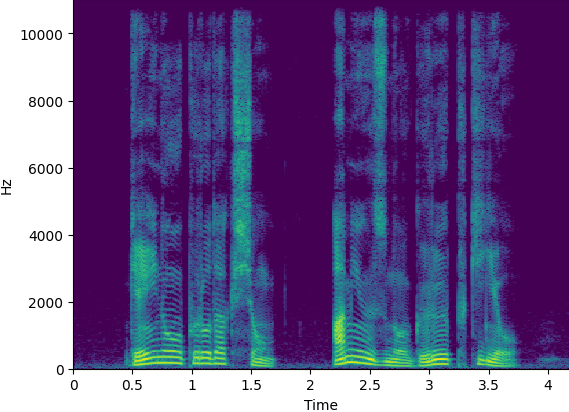

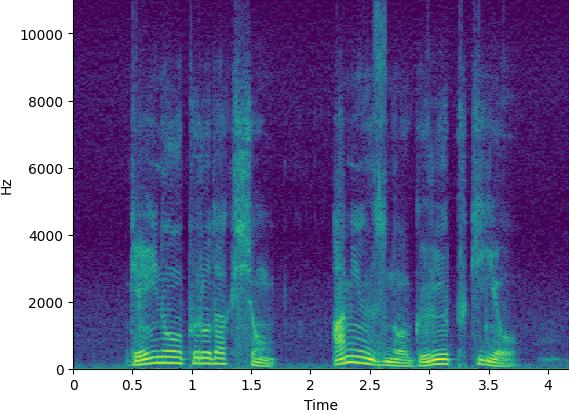

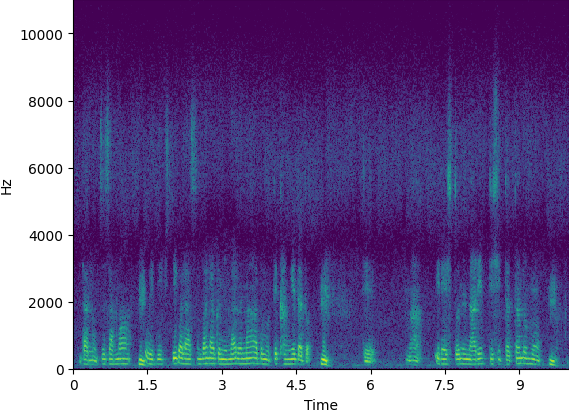

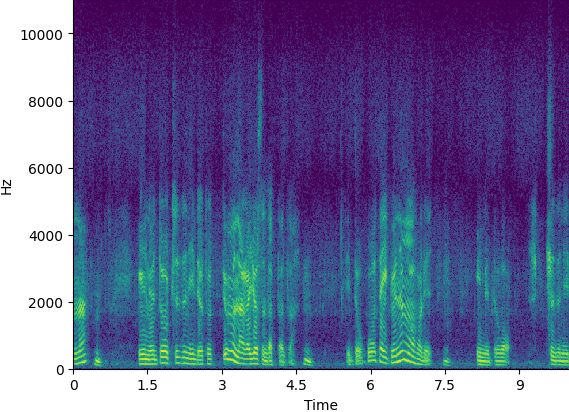

2. Speech resoration demo with real data

(2.a) Story telling of Japanese old tales| Utterance | Input | Liu, et al. [1] | SSL-dual-pre (MelSpec) | SSL-dual-pre (SourceFilter) |

|---|---|---|---|---|

| Sample1 |  |

|

|

|

| Sample2 |  |

|

|

|

| Sample3 |  |

|

|

|

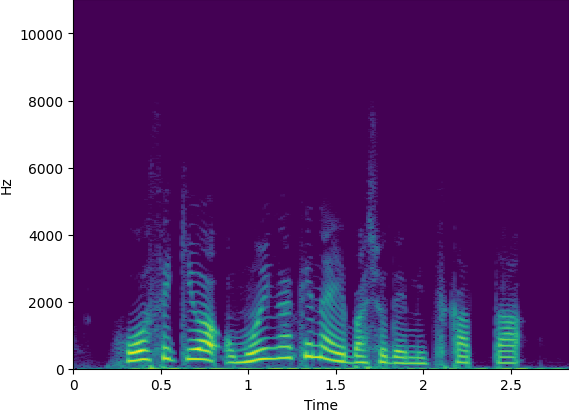

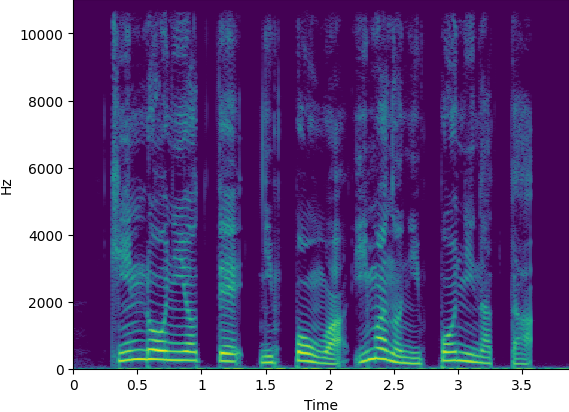

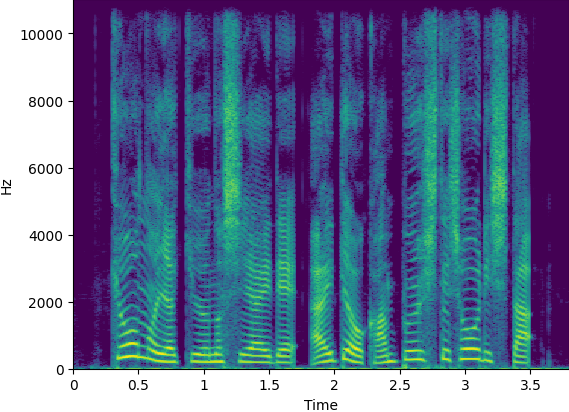

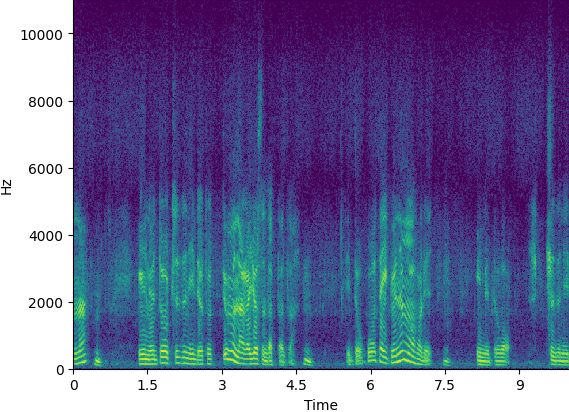

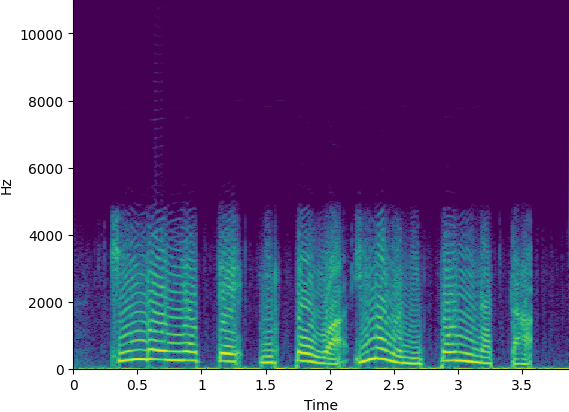

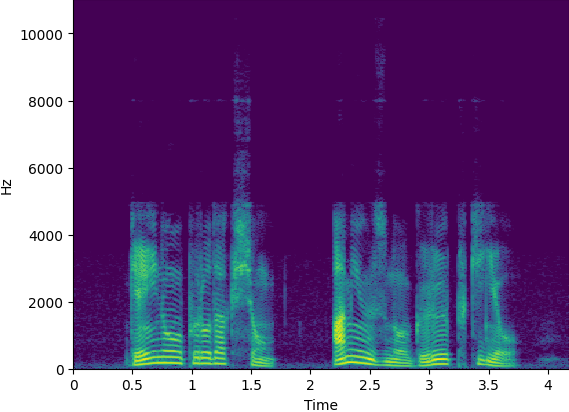

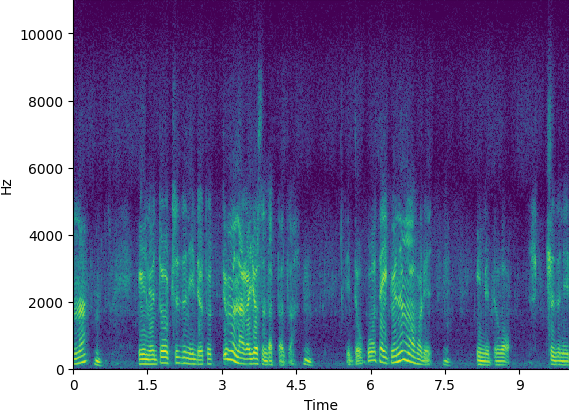

3. Audio effect transfer demo

(3.a) Simulated data (Quantized & Resampled)| Utterance | Source | Reference | High-quality | Mean spec. diff. | Proposed |

|---|---|---|---|---|---|

| Sample1 |  |

|

|

|

|

| Sample2 |  |

|

|

|

|

| Sample3 |  |

|

|

|

|

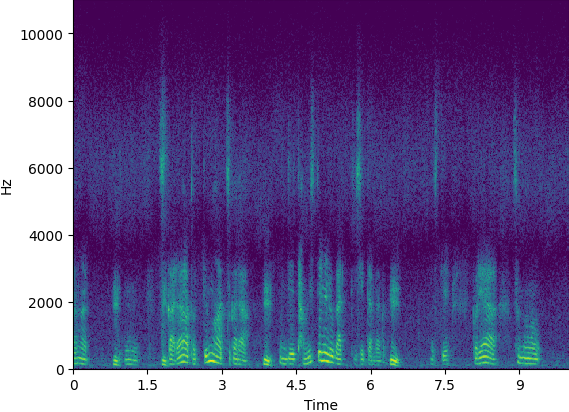

(3.b) Real data (Story telling of Japanese old tales)

| Utterance | Source | Reference | High-quality | Proposed |

|---|---|---|---|---|

| Sample1 |  |

|

|

|

| Sample2 |  |

|

|

|

| Sample3 |  |

|

|

|

References

- H. Liu et al., "Voicefixer: Toward general speech restoration with neural vocoder,"

arXiv , vol. abs/2109.13731, 2021