SelfRemaster: Self-Supervised Speech Restoration for Historical Audio Resources

Paper

Coming soon. (Accepted by IEEE Access)

Author

Takaaki Saeki, Shinnosuke Takamichi, Tomohiko Nakamura, Naoko Tanji, Hiroshi Saruwatari (The University of Tokyo, Japan)

Abstract

Restoring high-quality speech from degraded historical recordings is crucial for the preservation of cultural and endangered linguistic resources. A key challenge in this task is the scarcity of paired training data that replicates the original acoustic conditions of the historical audio. While previous approaches have used pseudo paired data generated by applying various distortions to clean speech corpora, their limitations stem from the inability to authentically simulate the acoustic variations in historical recordings. In this paper, we propose a self-supervised approach to speech restoration that does not require paired corpora. Our model has three main modules: analysis, synthesis, and channel modules, all of which are designed to emulate the recording process of degraded audio signals. Specifically, the analysis module disentangles undistorted speech and distortion features, and the synthesis module generates the restored speech waveform. The channel module then introduces distortions into the speech waveform to compute the reconstruction loss between the input and output degraded speech signals. We further improve our model by introducing several methods including dual learning and semi-supervised training frameworks. An additional feature of our model is the audio effect transfer, which allows acoustic distortions from degraded audio signals to be applied to arbitrary audio signals. Experimental evaluations demonstrate that our method significantly outperforms the previous supervised method for the restoration of real historical speech resources.
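The data flow described in the abstract (analysis, then synthesis, then channel, then a reconstruction loss against the degraded input) can be illustrated with a toy sketch. Everything below is an assumption made for illustration: the three modules are replaced by trivial stand-in functions, the "distortion" is a plain gain change, and the loss is a mean absolute difference. None of the names come from the paper or its code, and the real modules are neural networks.

```python
def toy_analysis(degraded):
    """Stand-in analysis module: split the input into 'speech' and 'channel' features.

    Assumption for this toy: the only distortion is a gain change, so the
    channel feature is the peak level and the speech feature is the
    peak-normalized waveform.
    """
    gain = max(abs(s) for s in degraded) or 1.0
    speech_features = [s / gain for s in degraded]
    return speech_features, gain


def toy_synthesis(speech_features):
    """Stand-in synthesis module: here the features already are the waveform."""
    return list(speech_features)


def toy_channel(waveform, channel_features):
    """Stand-in channel module: re-apply the estimated distortion (a gain)."""
    return [channel_features * s for s in waveform]


def reconstruction_step(degraded, analysis, synthesis, channel):
    """Run one forward pass and return (restored waveform, reconstruction loss)."""
    speech_features, channel_features = analysis(degraded)
    restored = synthesis(speech_features)
    redegraded = channel(restored, channel_features)
    # Assumed loss for this sketch: mean absolute difference between the
    # degraded input and the re-degraded output.
    loss = sum(abs(a - b) for a, b in zip(degraded, redegraded)) / len(degraded)
    return restored, loss


clean = [0.0, 0.5, 1.0, -0.5]
degraded = [0.5 * s for s in clean]  # hypothetical degradation: halve the level
restored, loss = reconstruction_step(degraded, toy_analysis, toy_synthesis, toy_channel)
```

Because the loss only compares the degraded input with the re-degraded output, no clean reference is needed at training time, which is the point of the self-supervised setup. Passing channel features estimated from one recording together with a different waveform to `toy_channel` mimics, in the same toy setting, the audio effect transfer mentioned above.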

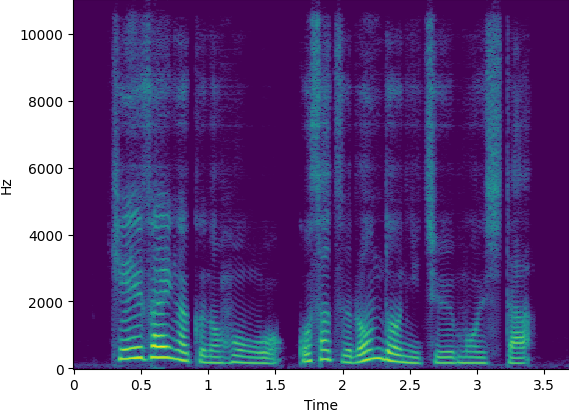

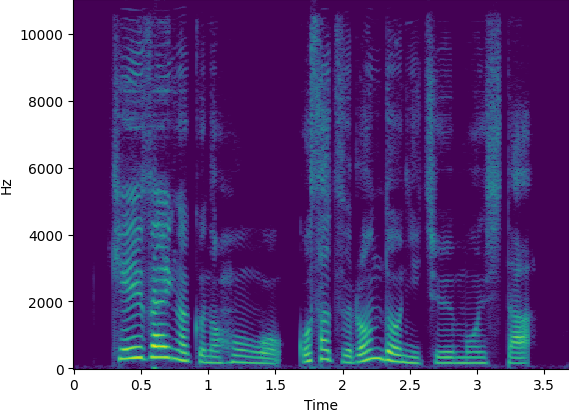

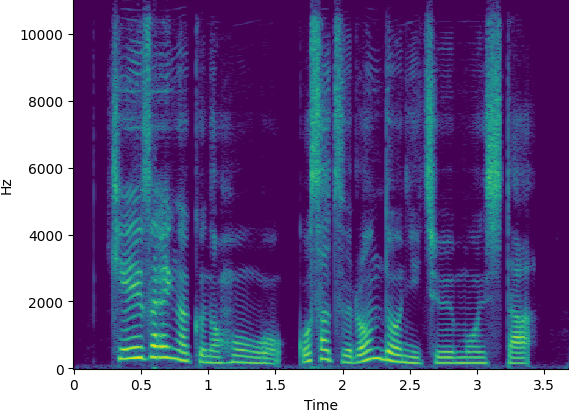

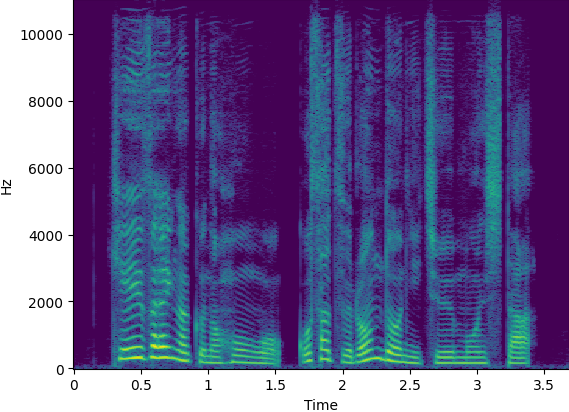

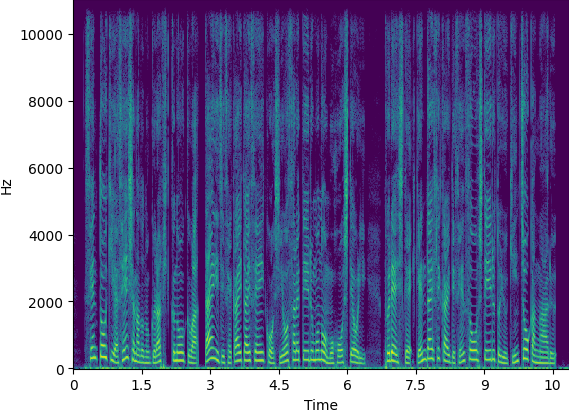

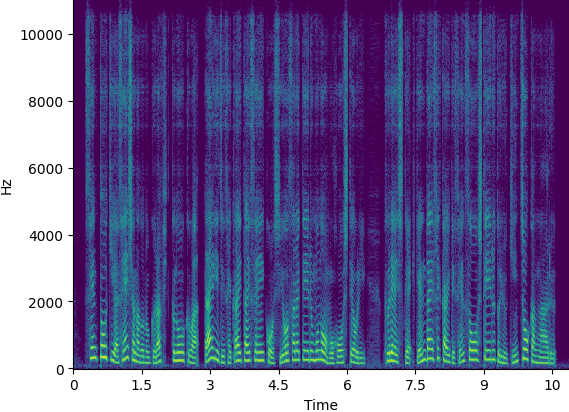

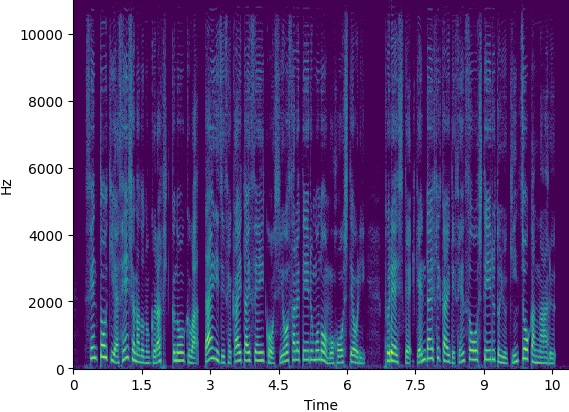

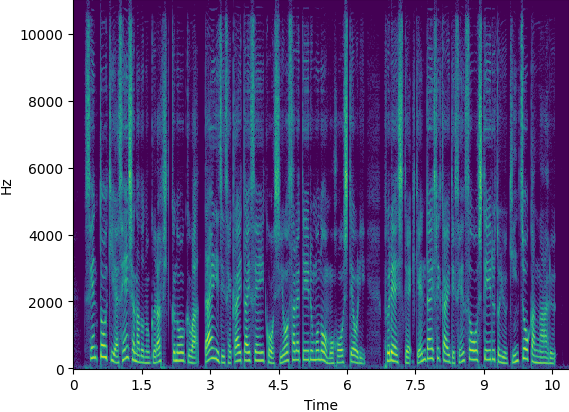

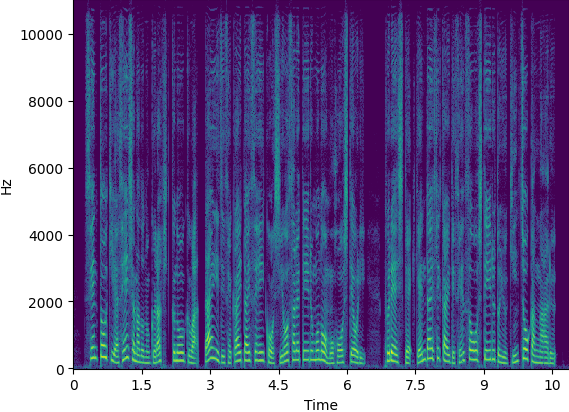

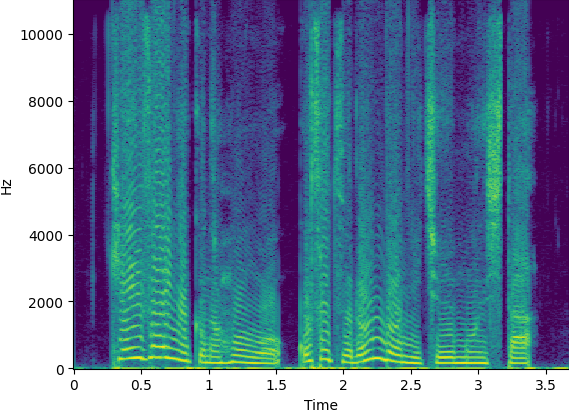

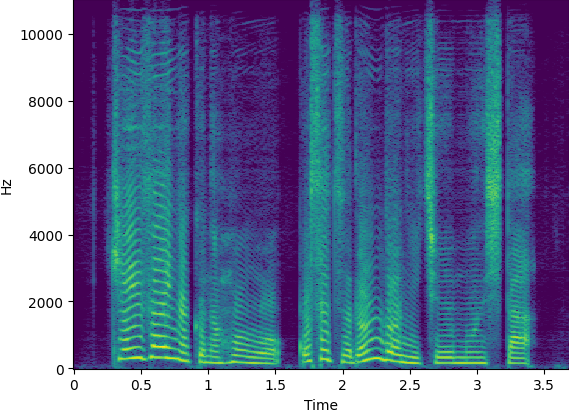

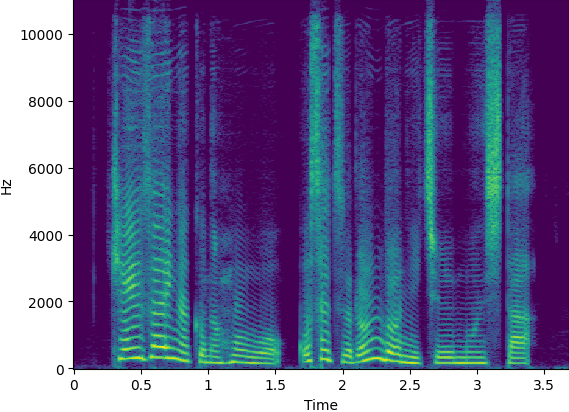

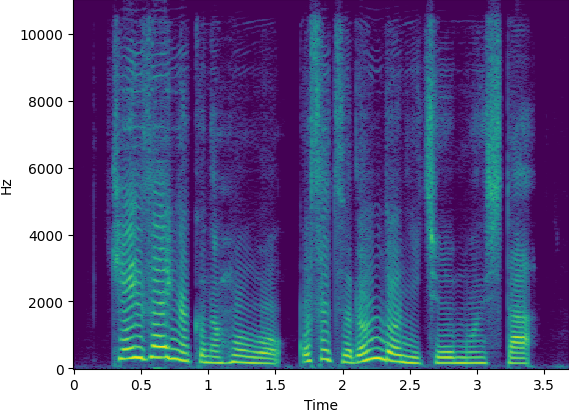

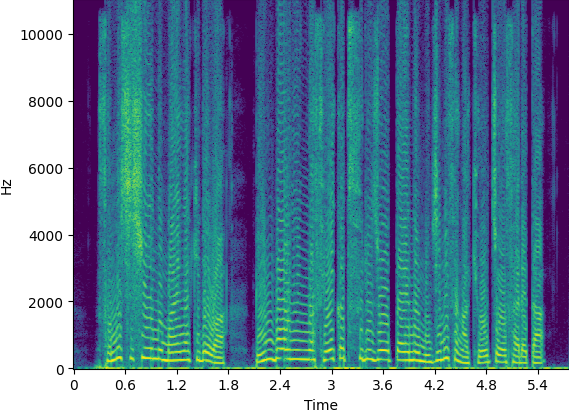

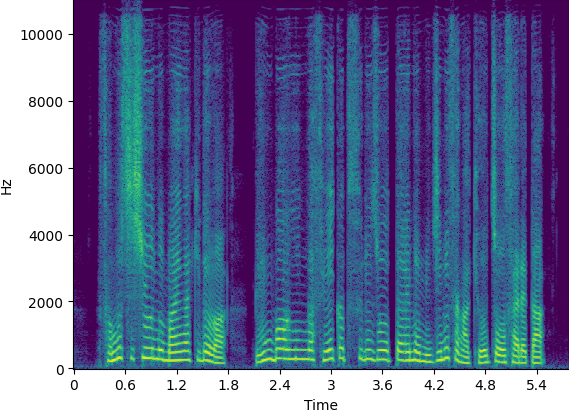

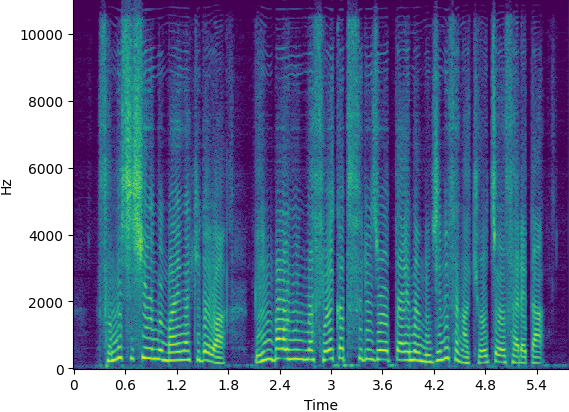

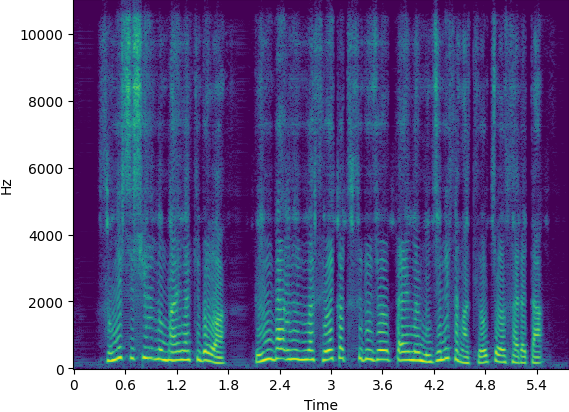

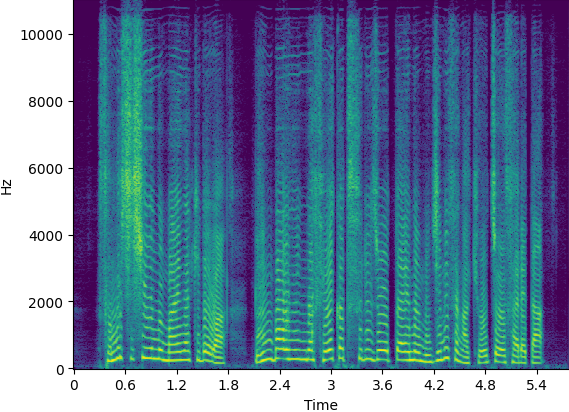

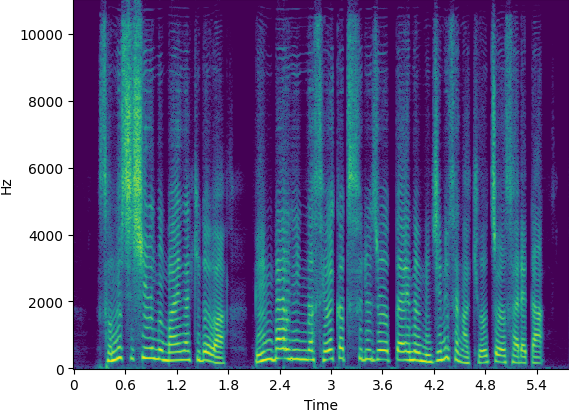

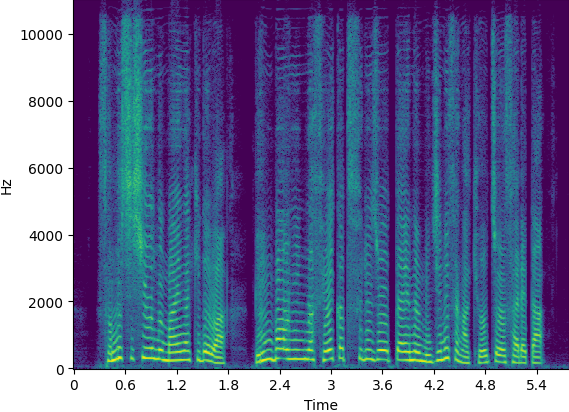

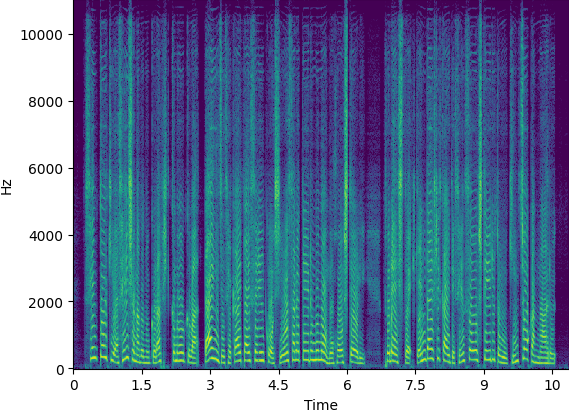

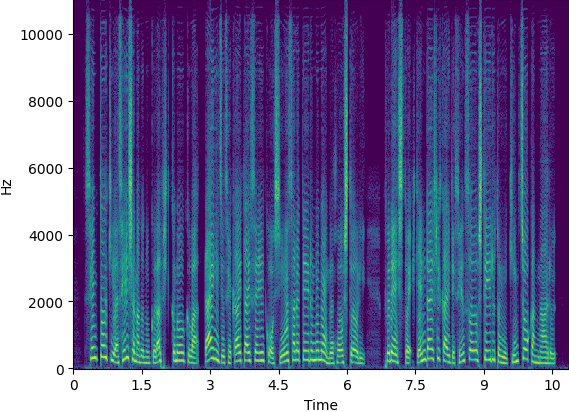

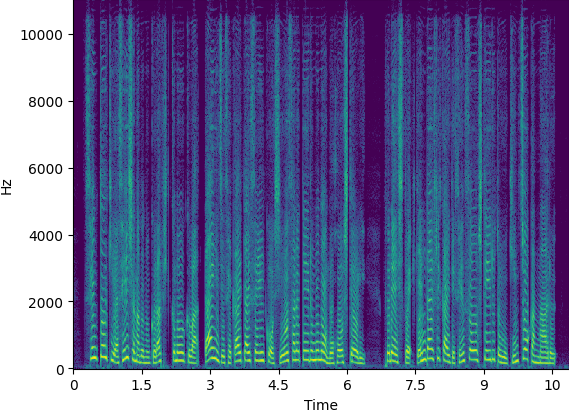

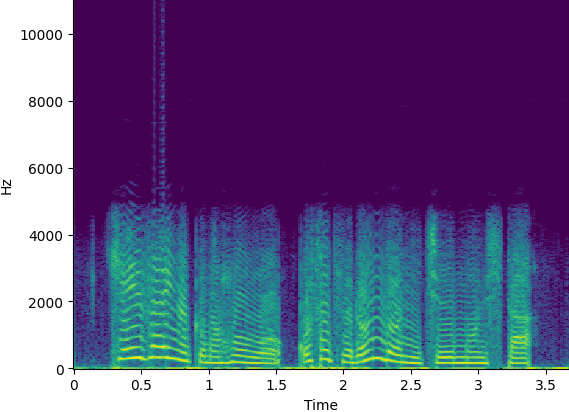

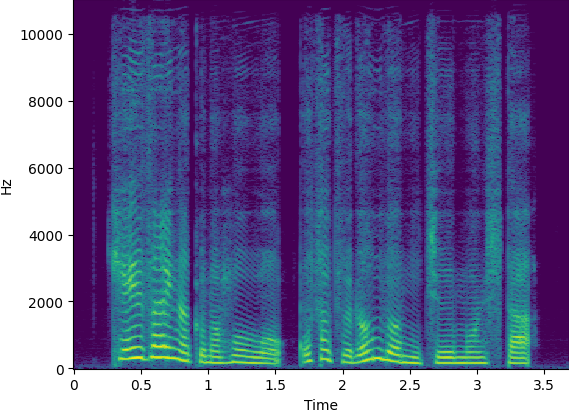

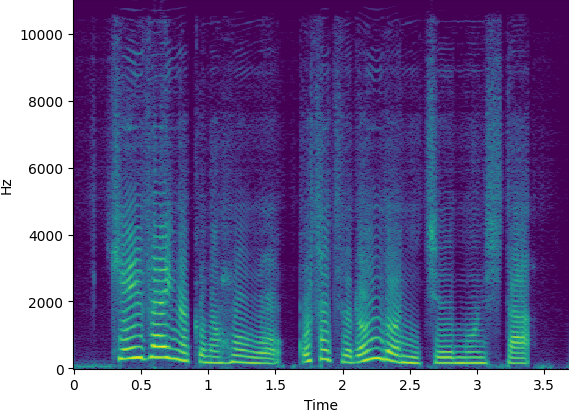

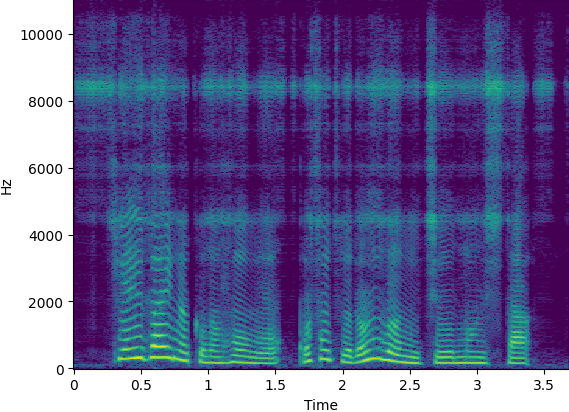

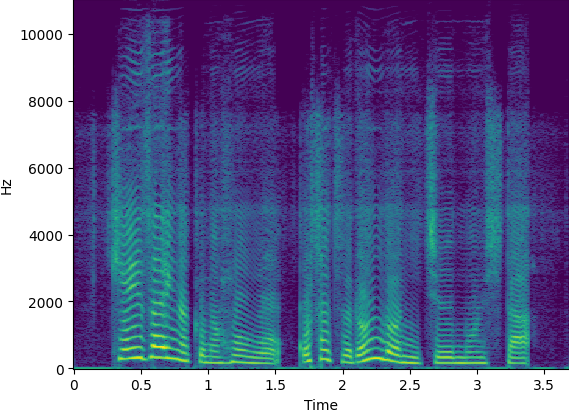

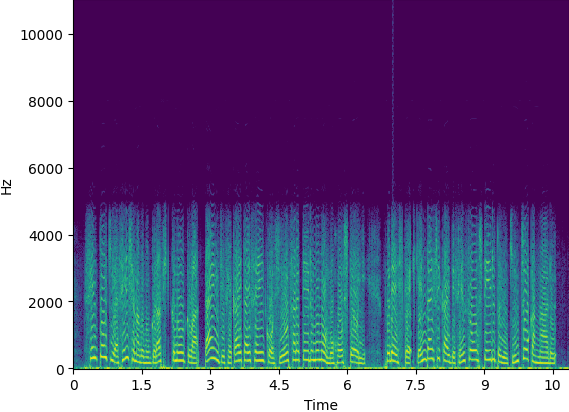

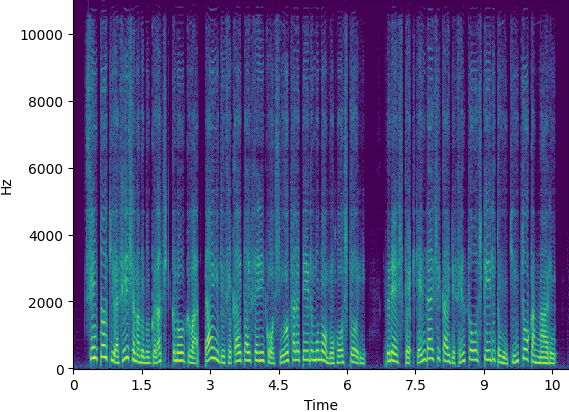

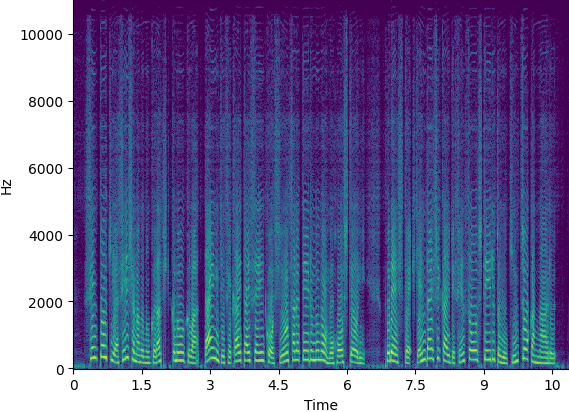

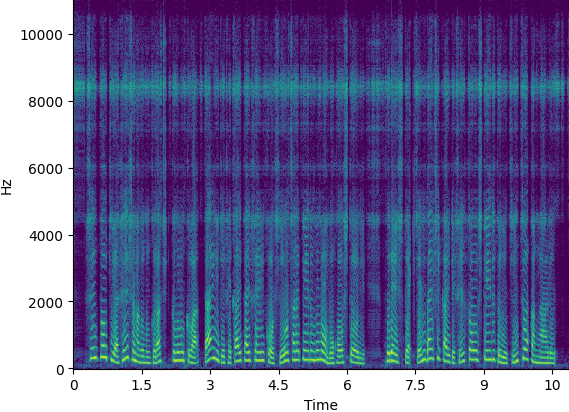

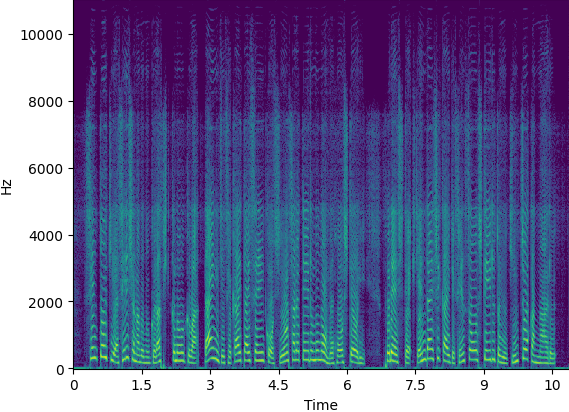

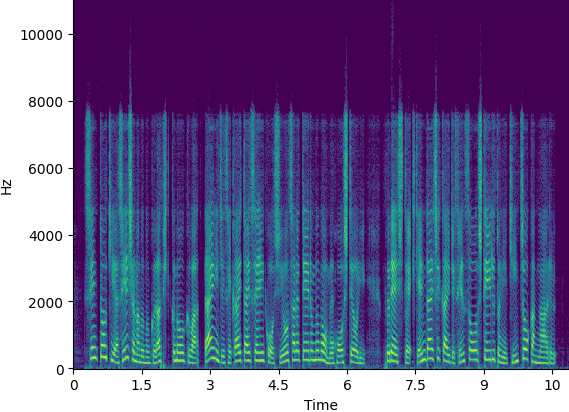

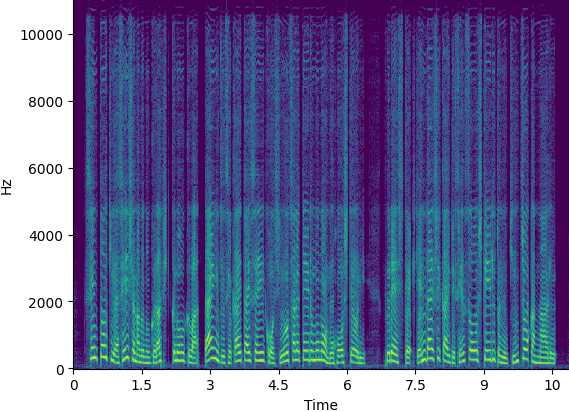

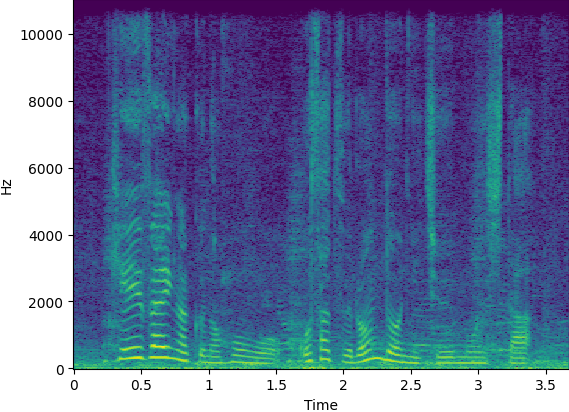

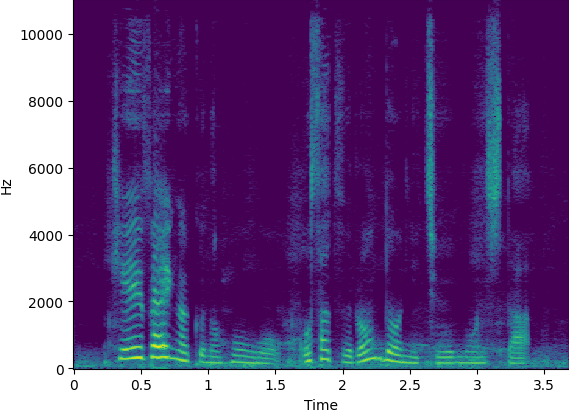

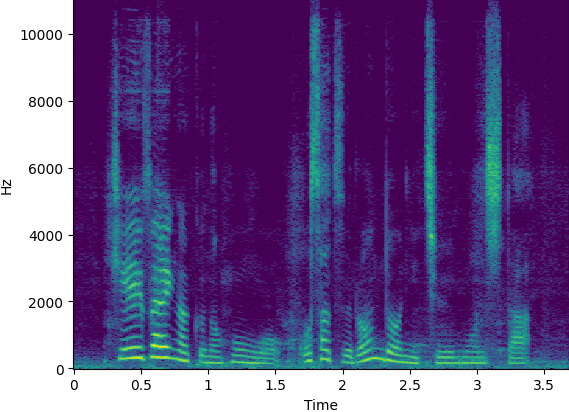

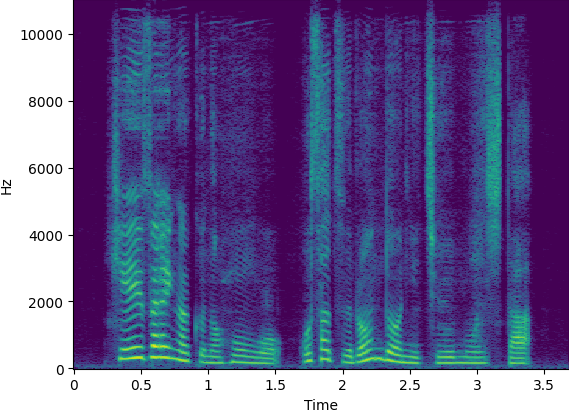

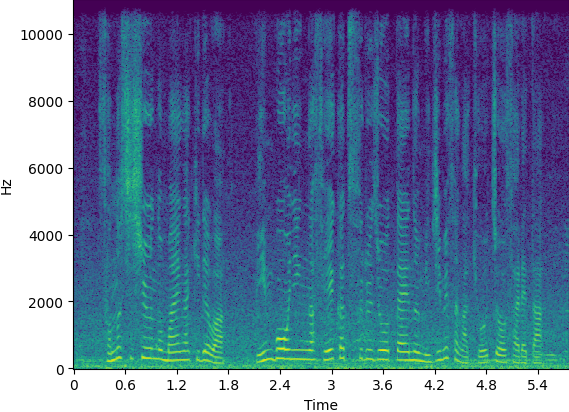

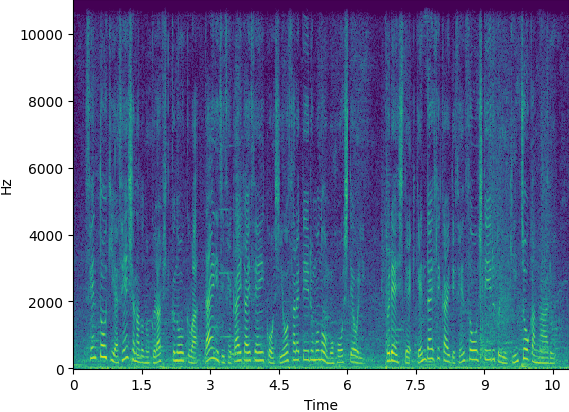

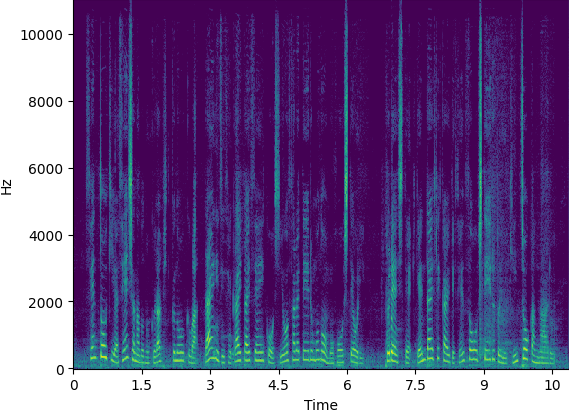

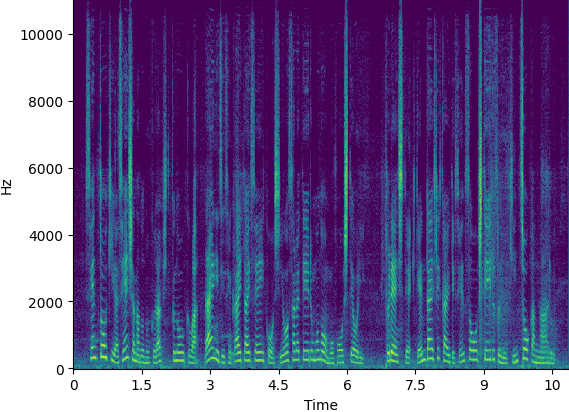

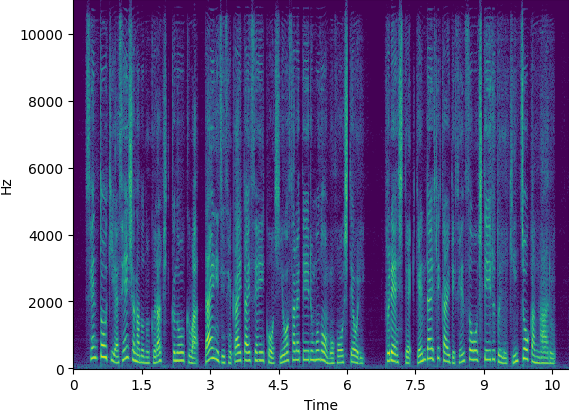

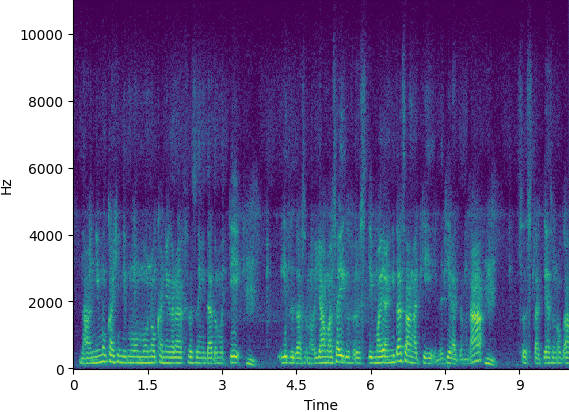

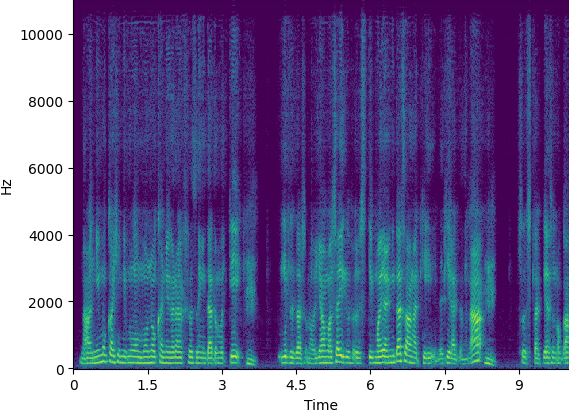

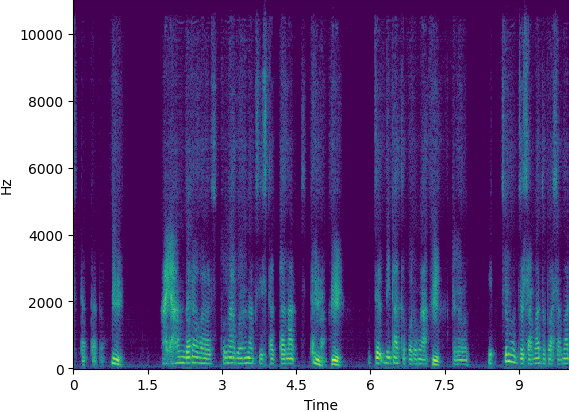

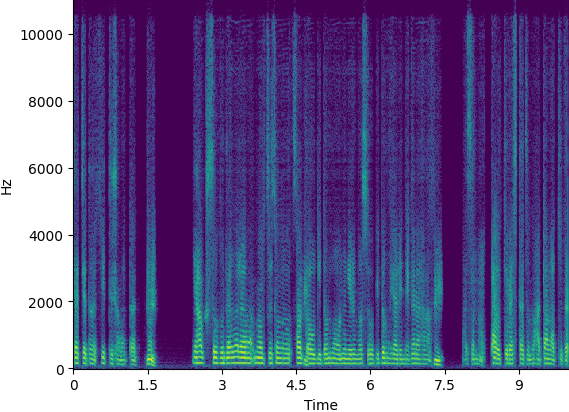

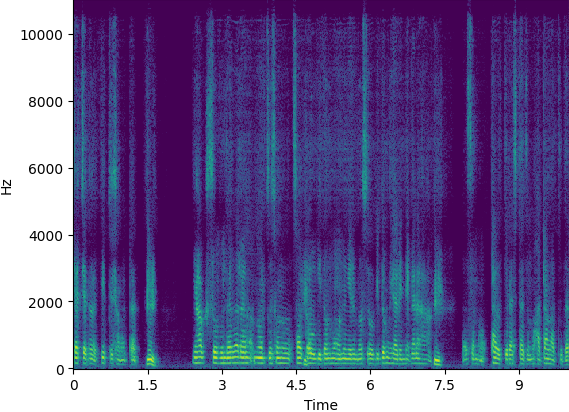

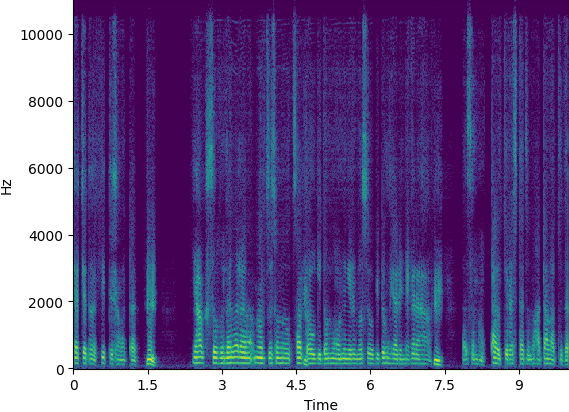

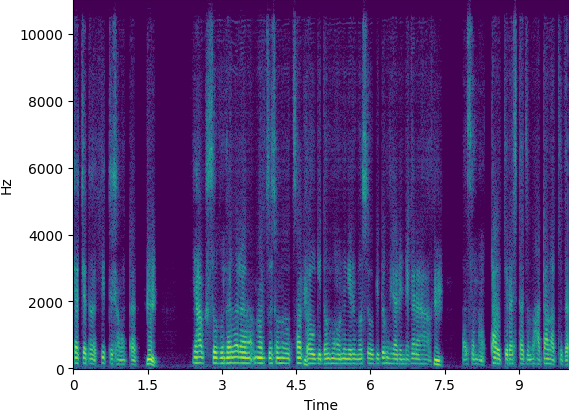

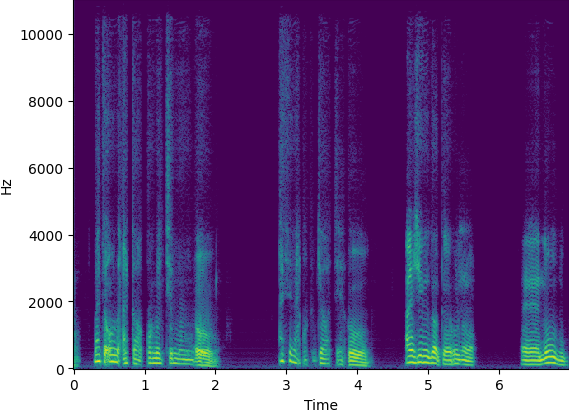

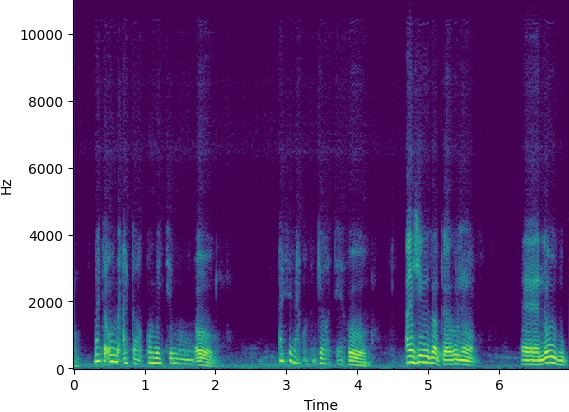

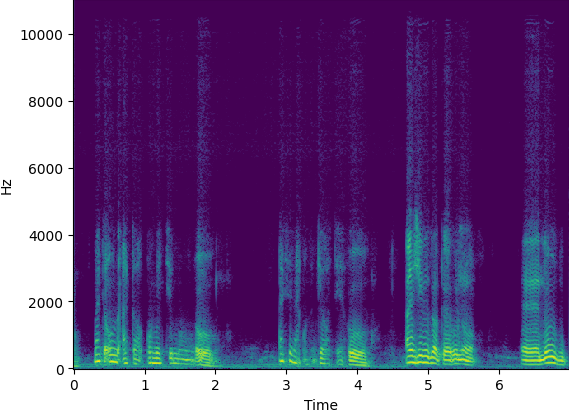

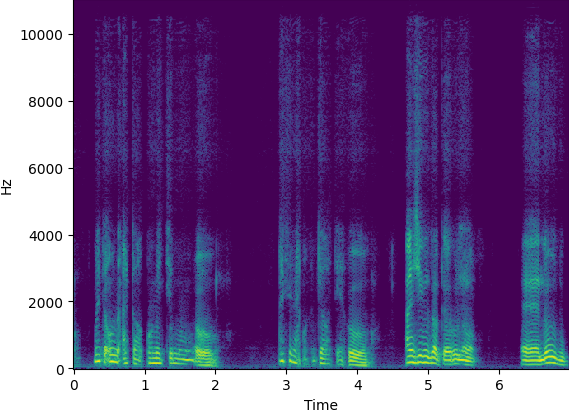

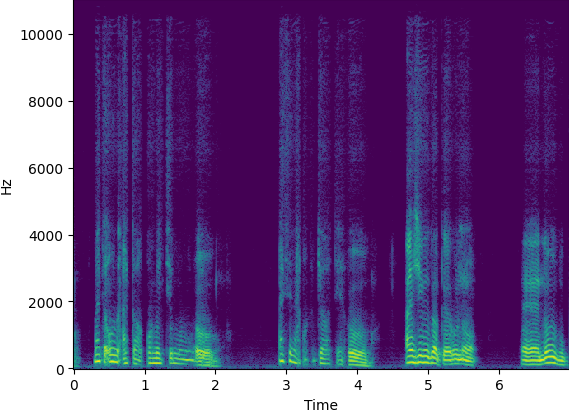

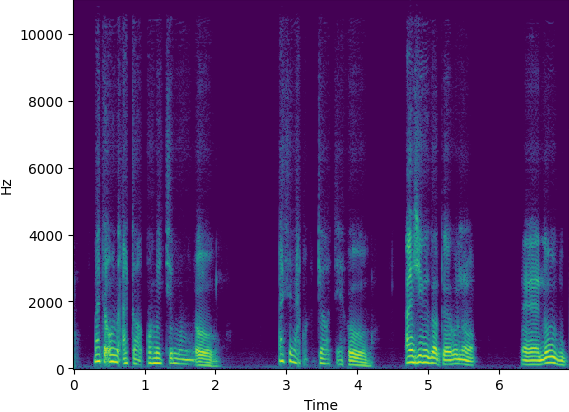

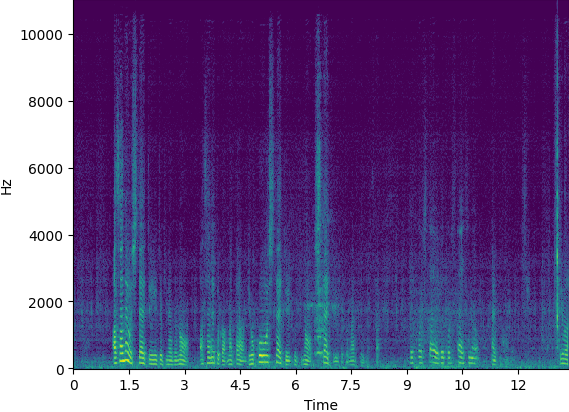

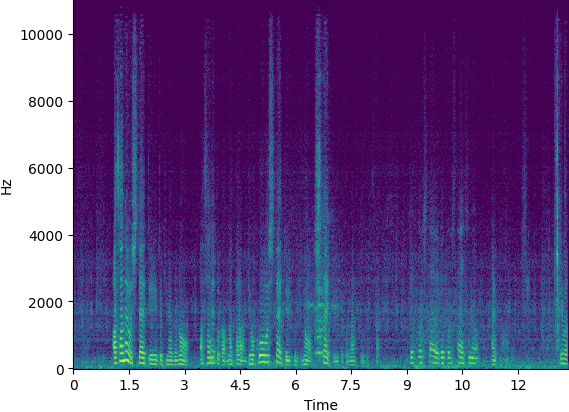

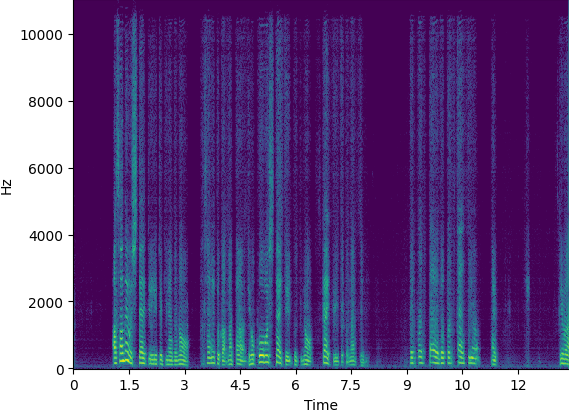

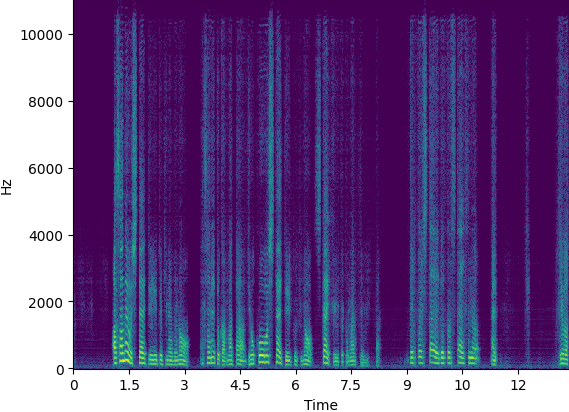

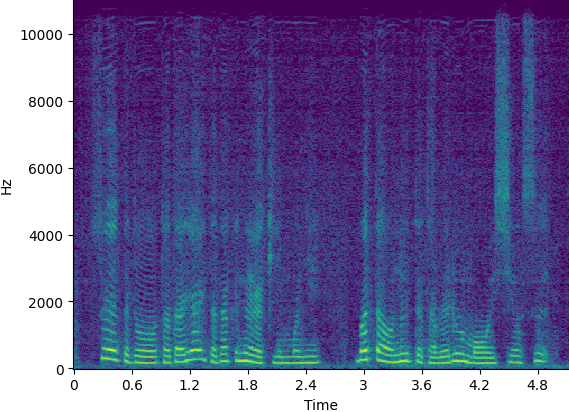

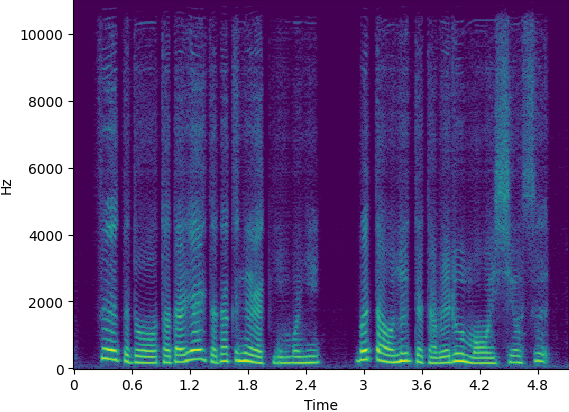

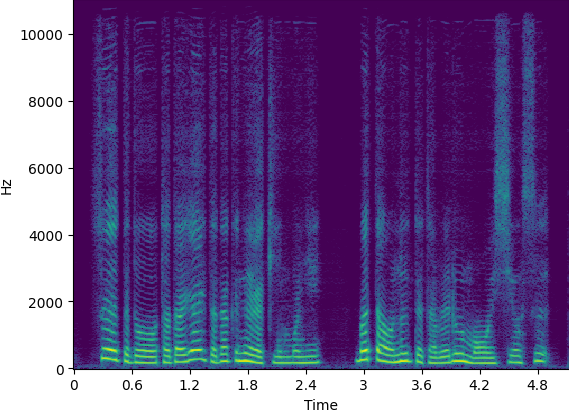

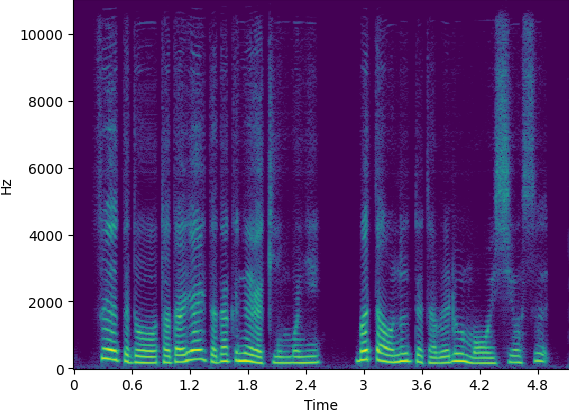

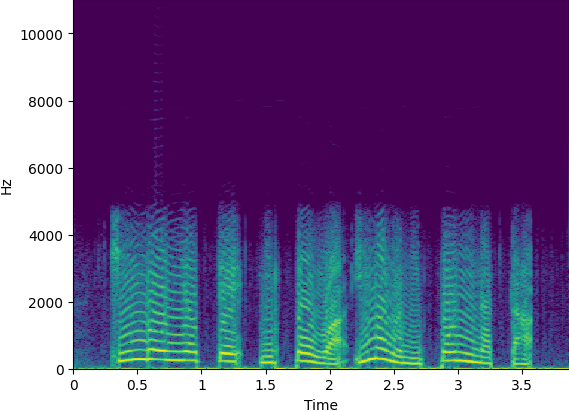

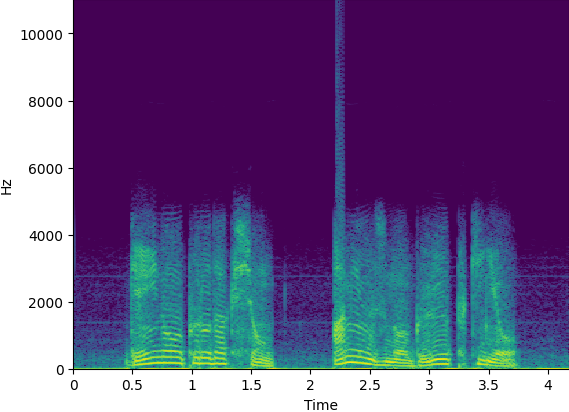

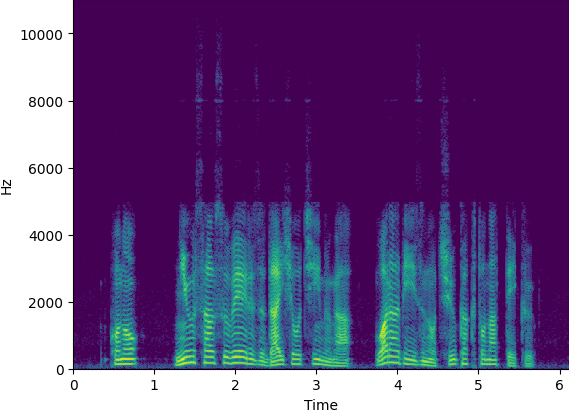

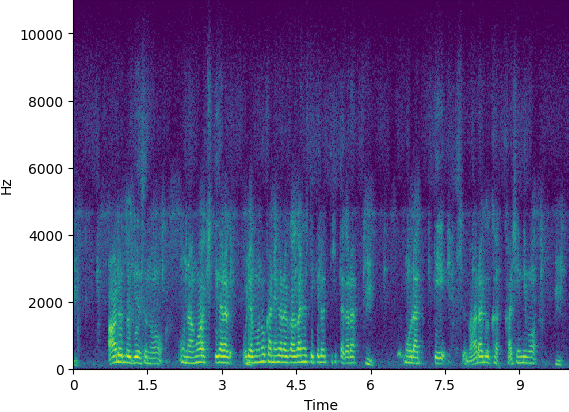

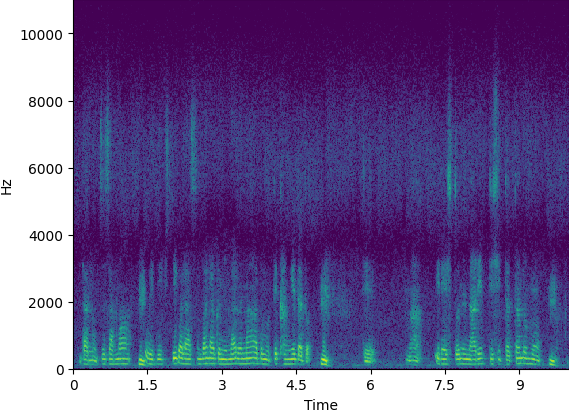

1. Speech restoration demo with simulated data

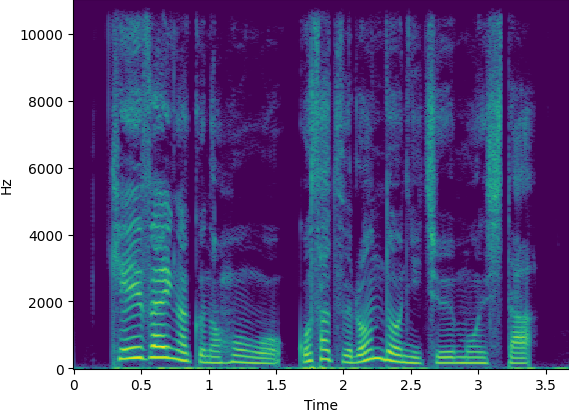

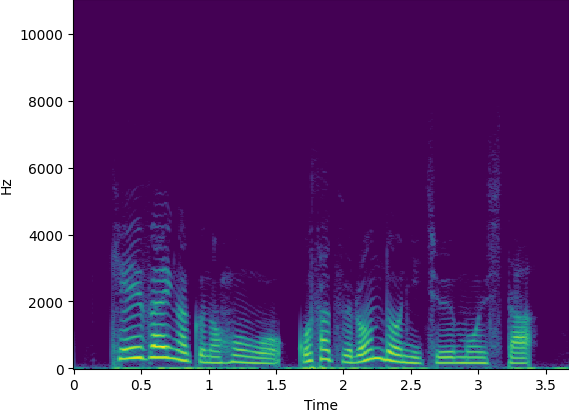

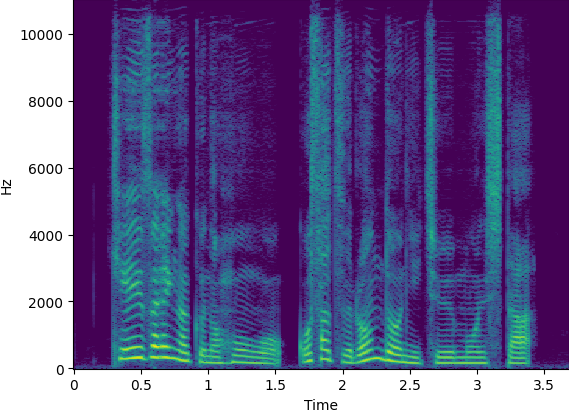

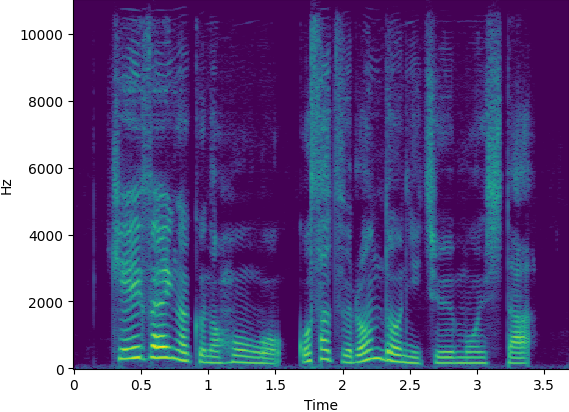

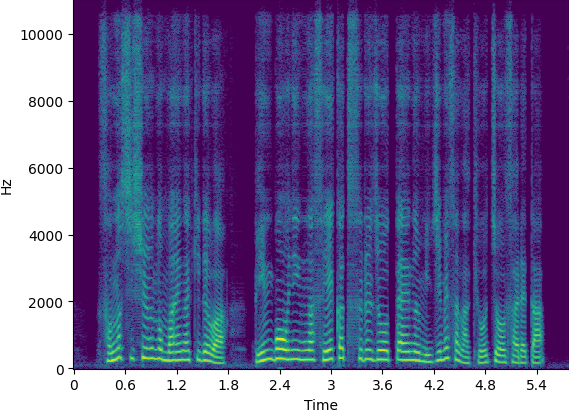

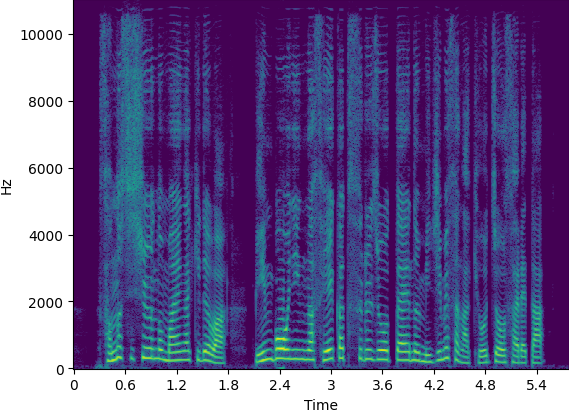

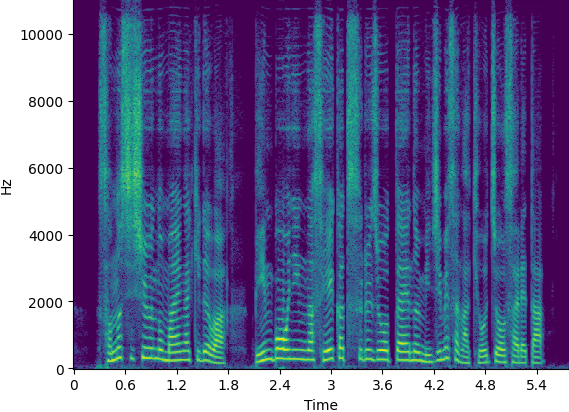

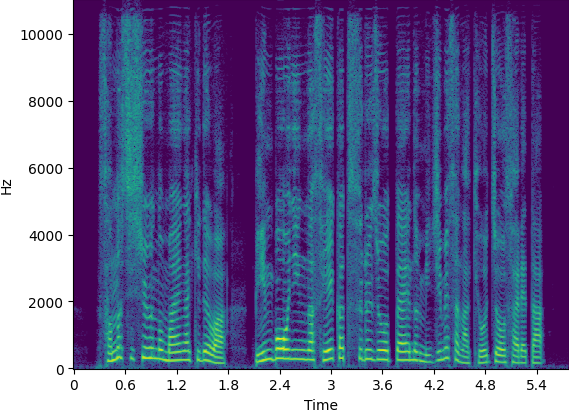

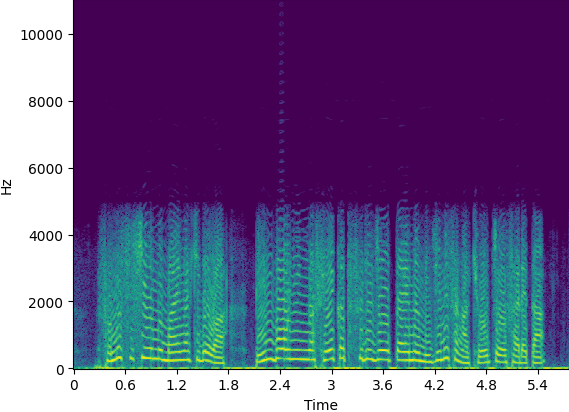

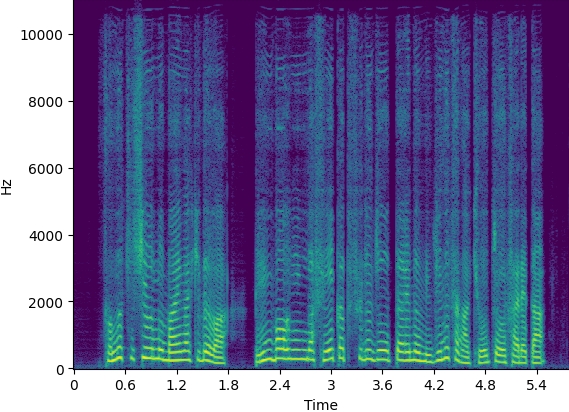

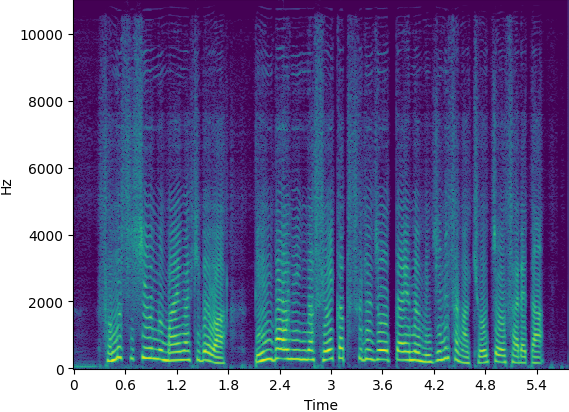

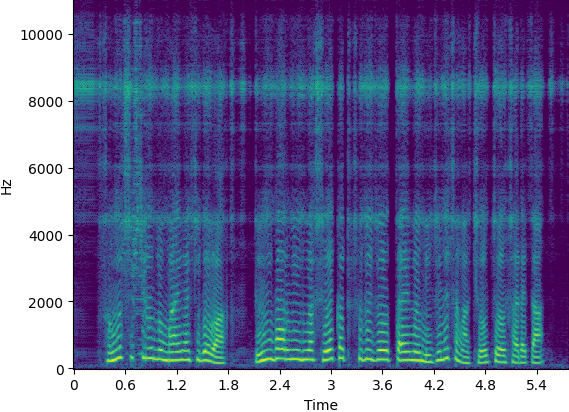

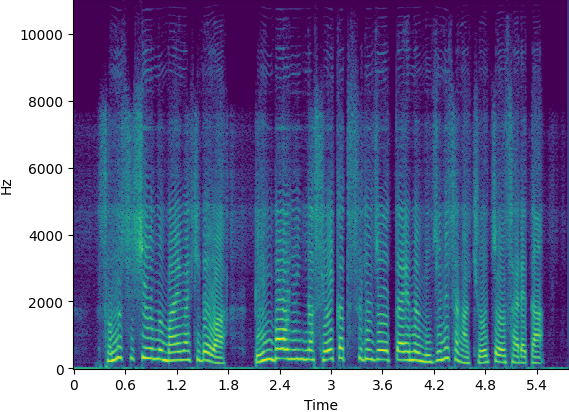

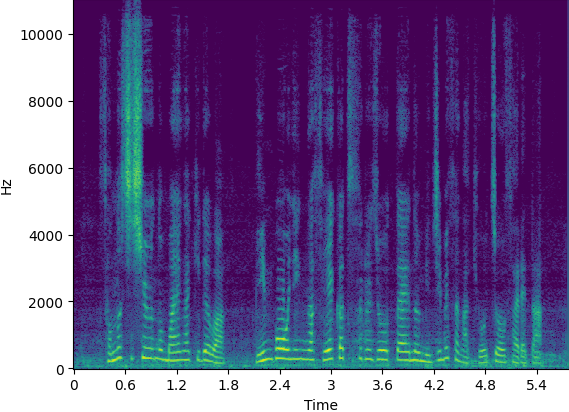

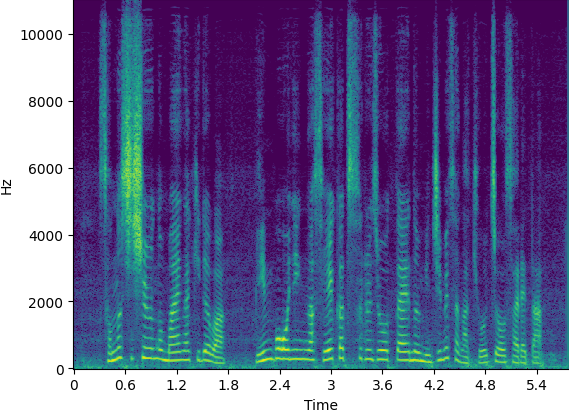

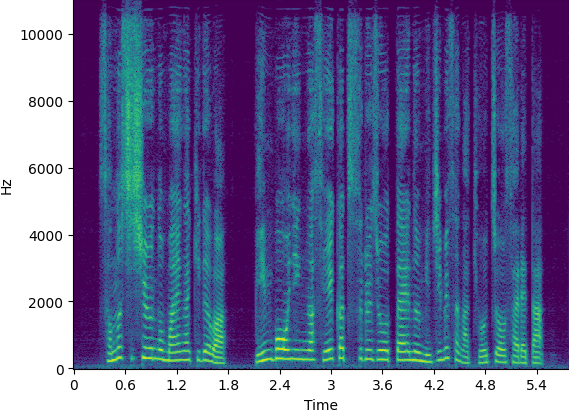

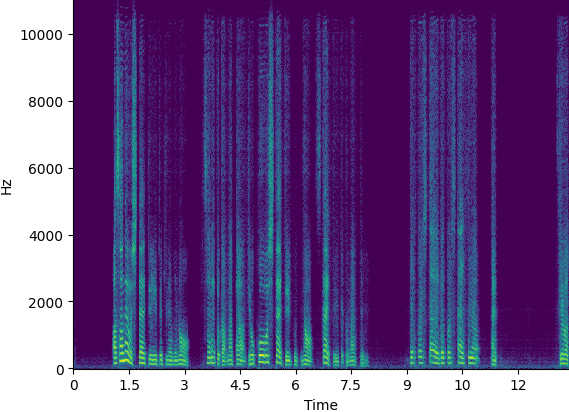

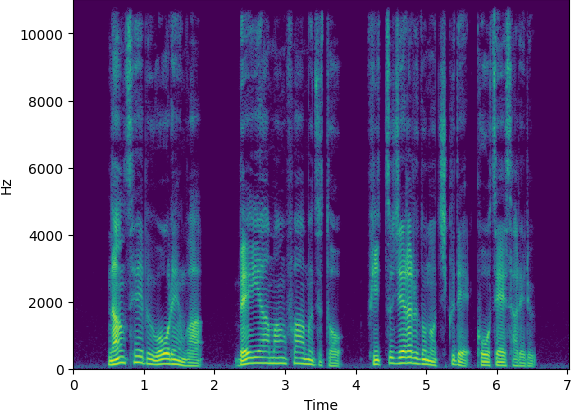

(1.a) Band-limited

| Utterance | Ground truth | Original | Supervised [1] | Self-Mel | Self-SF | Self-Mel-RefEnc | Self-Mel-PLoss | Semi-Mel |
|---|---|---|---|---|---|---|---|---|
| Sample1 |  |  |  |  |  |  |  |  |
| Sample2 |  |  |  |  |  |  |  |  |
| Sample3 |  |  |  |  |  |  |  |  |
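For readers who want to reproduce a band-limited condition like (1.a) on their own audio, the following is a minimal sketch using a Hamming-windowed sinc low-pass filter in pure Python. The cutoff frequency, sample rate, and filter length are assumptions chosen for illustration; the page does not state which settings were used to create the demo samples.

```python
import math


def lowpass_fir(cutoff_hz, sample_rate, num_taps=101):
    """Return Hamming-windowed sinc low-pass taps, normalized to unit DC gain."""
    fc = cutoff_hz / sample_rate          # cutoff in cycles per sample
    mid = (num_taps - 1) / 2              # center tap index (num_taps should be odd)
    taps = []
    for n in range(num_taps):
        k = n - mid
        ideal = 2 * fc if k == 0 else math.sin(2 * math.pi * fc * k) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]


def band_limit(samples, cutoff_hz, sample_rate, num_taps=101):
    """Low-pass filter a list of samples; output is aligned with the input."""
    taps = lowpass_fir(cutoff_hz, sample_rate, num_taps)
    half = num_taps // 2
    out = []
    for i in range(len(samples)):
        acc = 0.0
        for j, t in enumerate(taps):
            idx = i + half - j            # centered convolution, zero padding at the edges
            if 0 <= idx < len(samples):
                acc += t * samples[idx]
        out.append(acc)
    return out


# Assumed example settings: 16 kHz audio, 2 kHz cutoff.
sr = 16000
low = [math.sin(2 * math.pi * 500 * n / sr) for n in range(800)]
high = [math.sin(2 * math.pi * 6000 * n / sr) for n in range(800)]
low_out = band_limit(low, 2000, sr)     # 500 Hz tone lies in the passband
high_out = band_limit(high, 2000, sr)   # 6 kHz tone lies in the stopband
```

The direct convolution is slow for long recordings; it is written this way only to keep the example dependency-free.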

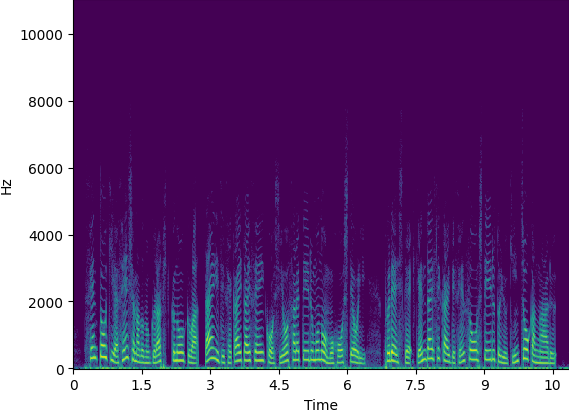

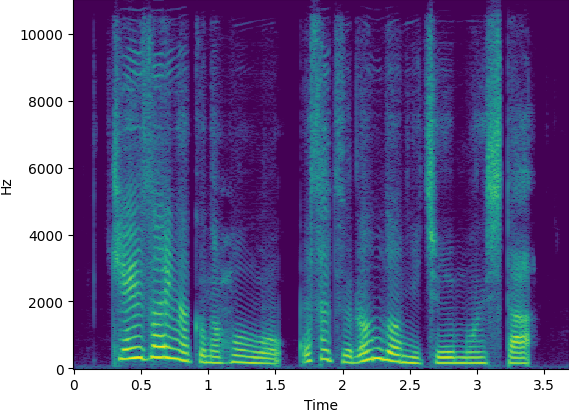

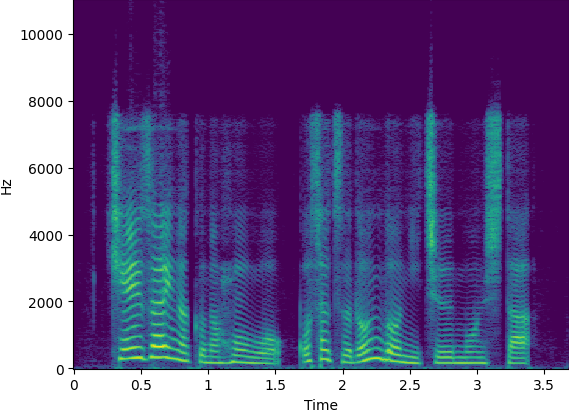

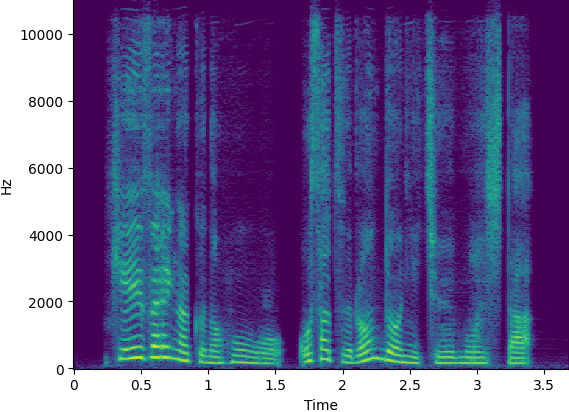

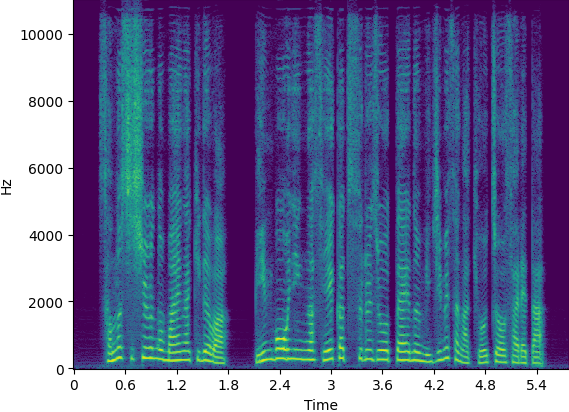

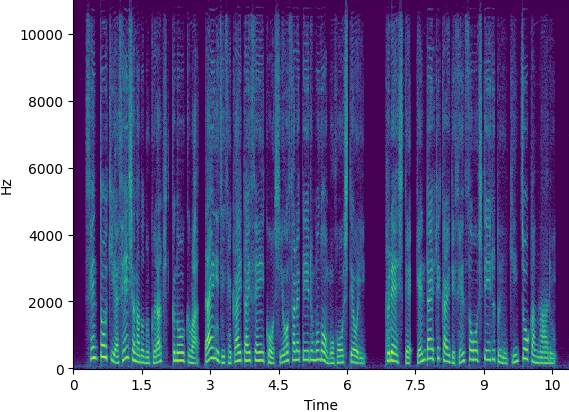

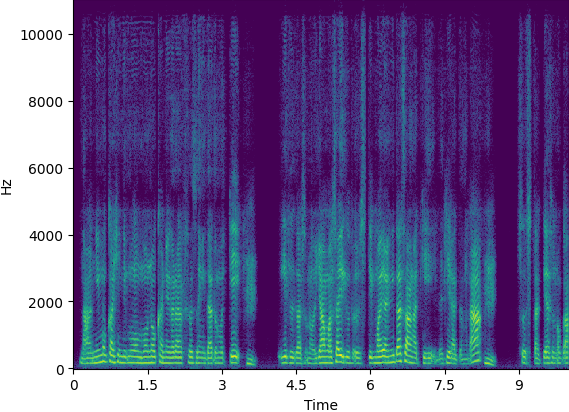

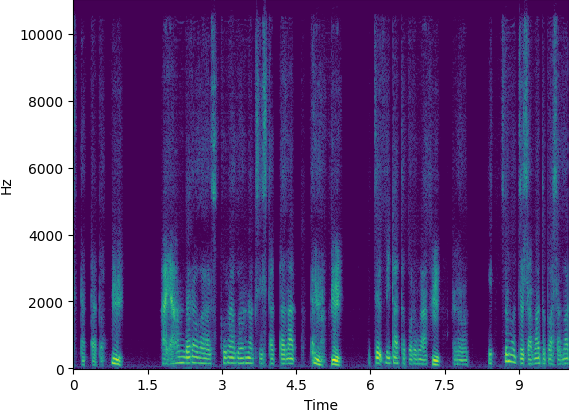

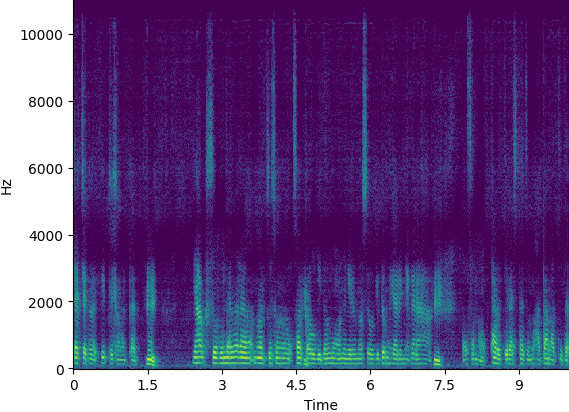

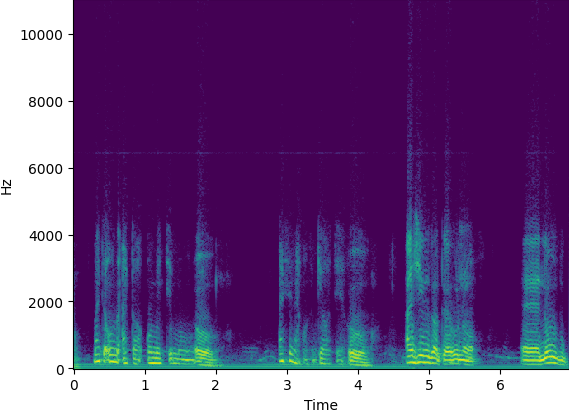

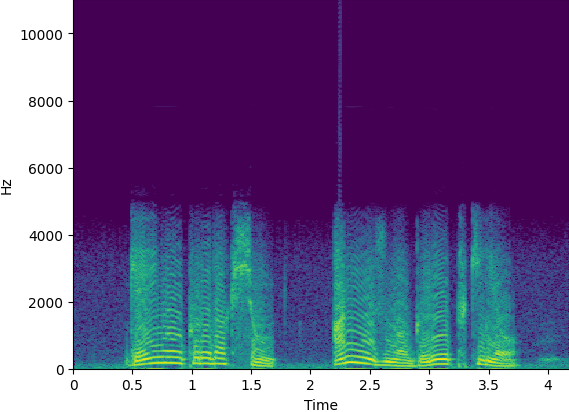

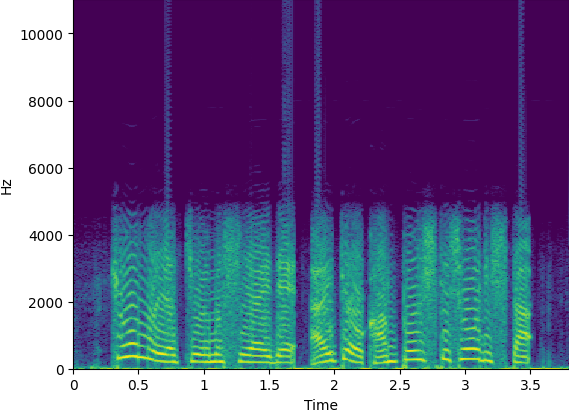

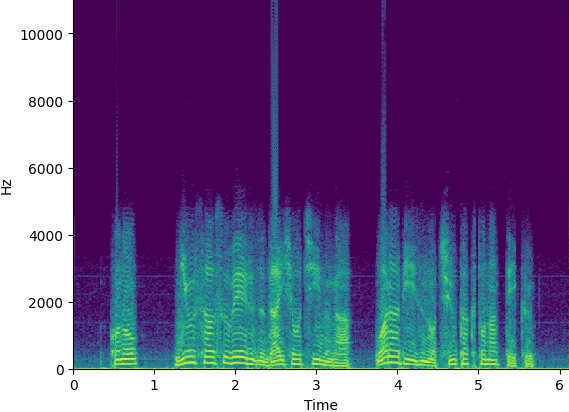

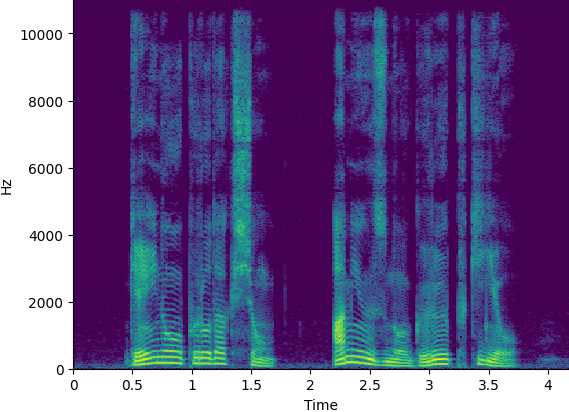

(1.b) Clipped

| Utterance | Ground truth | Original | Supervised [1] | Self-Mel | Self-SF | Self-Mel-RefEnc | Self-Mel-PLoss | Semi-Mel |
|---|---|---|---|---|---|---|---|---|
| Sample1 |  |  |  |  |  |  |  |  |
| Sample2 |  |  |  |  |  |  |  |  |
| Sample3 |  |  |  |  |  |  |  |  |
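A clipped condition like (1.b) can be approximated by hard clipping, where every sample beyond a threshold is clamped to that threshold. The sketch below assumes hard clipping and an arbitrary threshold of 0.25; the page does not specify the clipping type or level behind the demo samples.

```python
import math


def hard_clip(samples, threshold):
    """Clamp every sample to the range [-threshold, threshold]."""
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    return [max(-threshold, min(threshold, s)) for s in samples]


# A few cycles of a 440 Hz sine at an assumed 16 kHz sample rate, peak amplitude 1.0.
sr = 16000
sine = [math.sin(2 * math.pi * 440 * n / sr) for n in range(160)]
clipped = hard_clip(sine, threshold=0.25)
```

Samples whose magnitude is below the threshold pass through unchanged, so the distortion affects only the loud parts of the signal.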

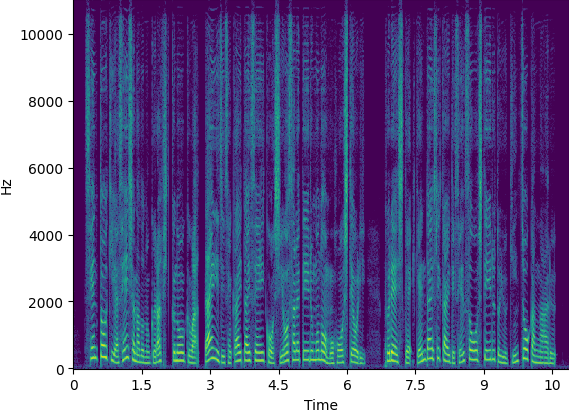

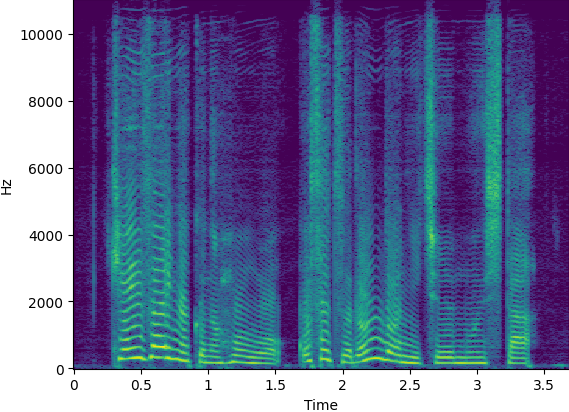

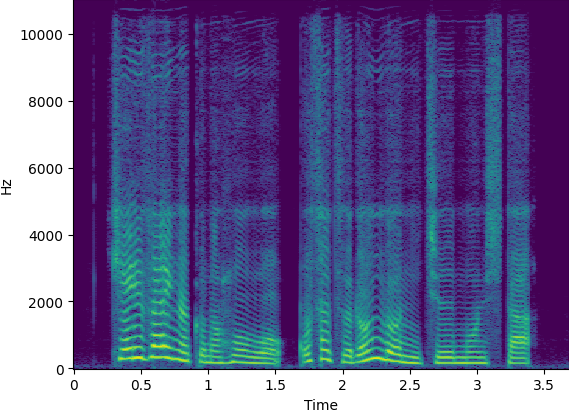

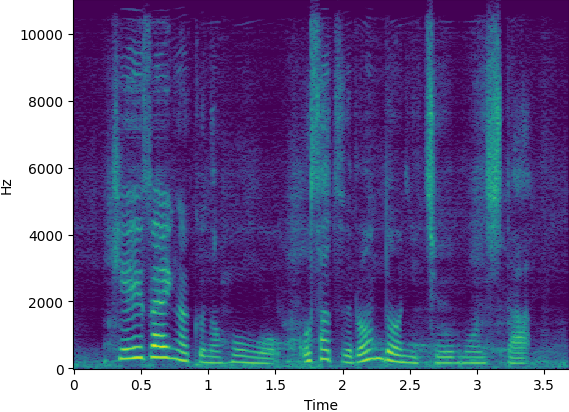

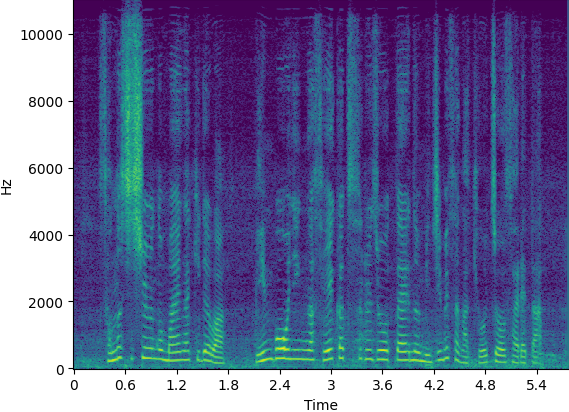

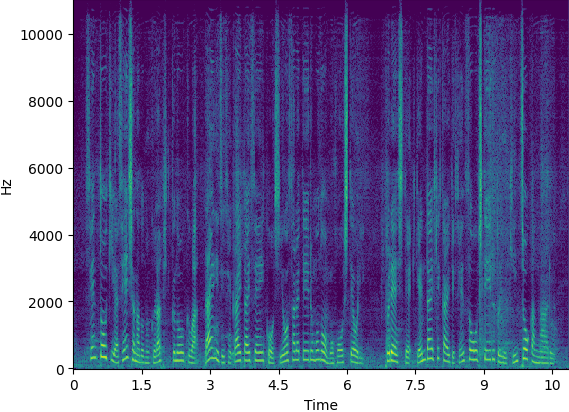

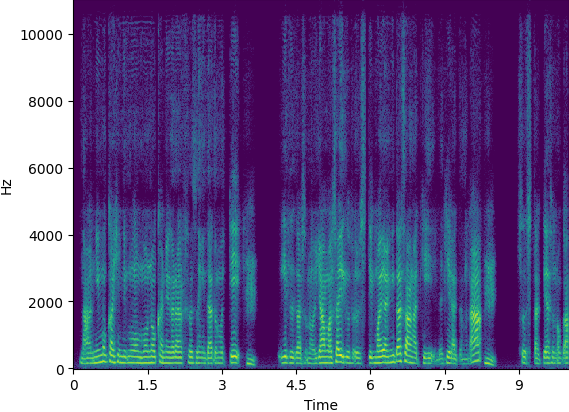

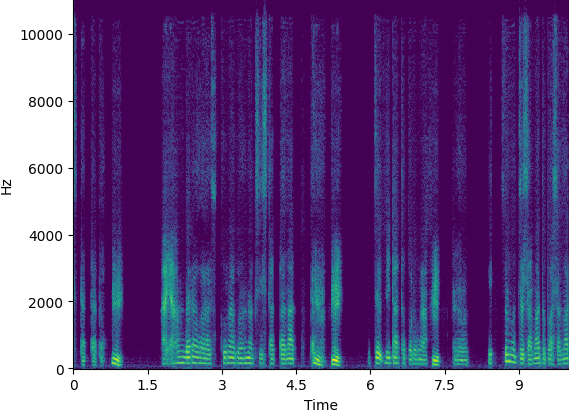

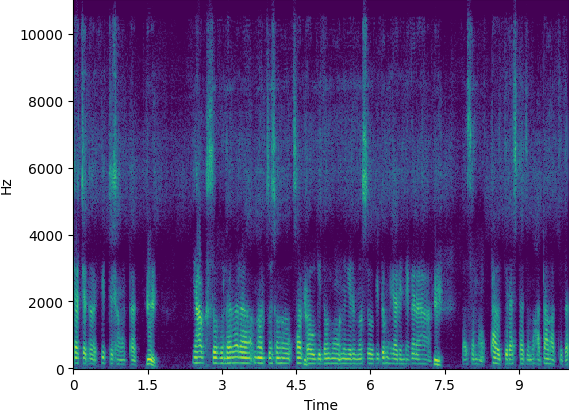

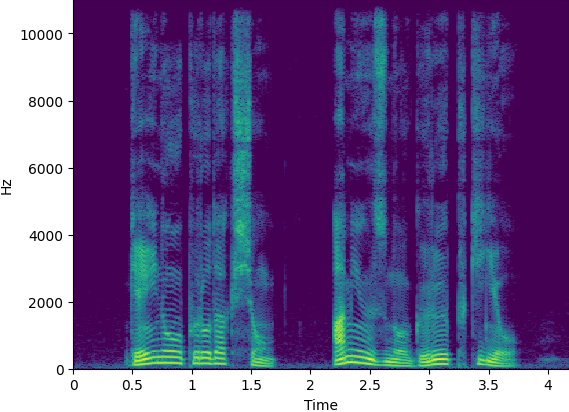

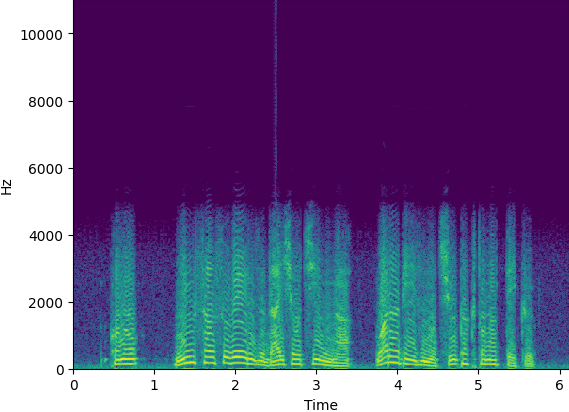

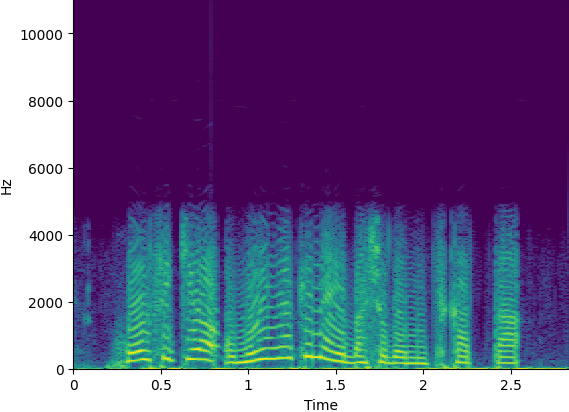

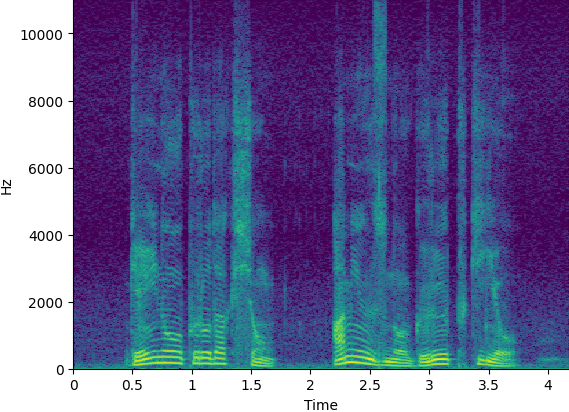

(1.c) Mu-Law

| Utterance | Ground truth | Original | Supervised [1] | Self-Mel | Self-SF | Self-Mel-RefEnc | Self-Mel-PLoss | Semi-Mel |
|---|---|---|---|---|---|---|---|---|
| Sample1 |  |  |  |  |  |  |  |  |
| Sample2 |  |  |  |  |  |  |  |  |
| Sample3 |  |  |  |  |  |  |  |  |
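The Mu-Law condition in (1.c) refers to mu-law companding followed by coarse quantization. As a minimal sketch, the code below compresses a sample with the standard mu-law curve, quantizes it to an integer code, and expands it again; the round trip is what introduces the distortion. The value mu = 255 (8-bit codes) is an assumption for illustration and may differ from the setting used for the demo samples.

```python
import math

MU = 255  # assumed companding parameter (8-bit codes 0..255)


def mu_law_encode(x, mu=MU):
    """Compress x in [-1, 1] with the mu-law curve and quantize to a code in [0, mu]."""
    x = max(-1.0, min(1.0, x))
    y = math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)
    return int(round((y + 1) / 2 * mu))


def mu_law_decode(code, mu=MU):
    """Map an integer code in [0, mu] back to a sample in [-1, 1]."""
    y = 2 * code / mu - 1
    return math.copysign(math.expm1(abs(y) * math.log1p(mu)) / mu, y)


def mu_law_distort(samples, mu=MU):
    """Apply the encode/decode round trip to every sample."""
    return [mu_law_decode(mu_law_encode(s, mu), mu) for s in samples]


sr = 16000
sine = [math.sin(2 * math.pi * 440 * n / sr) for n in range(160)]
distorted = mu_law_distort(sine)
```

Because the curve is logarithmic, quiet samples are quantized more finely than loud ones, so the quantization error grows with amplitude.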

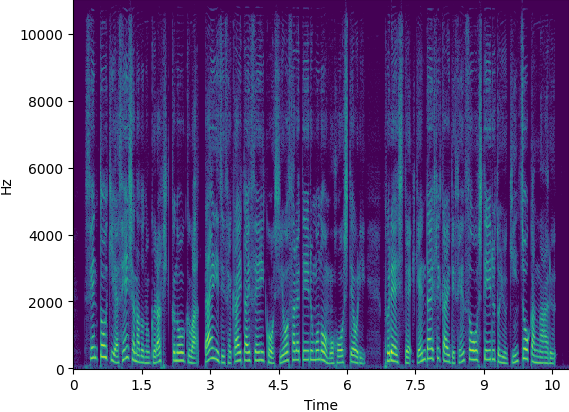

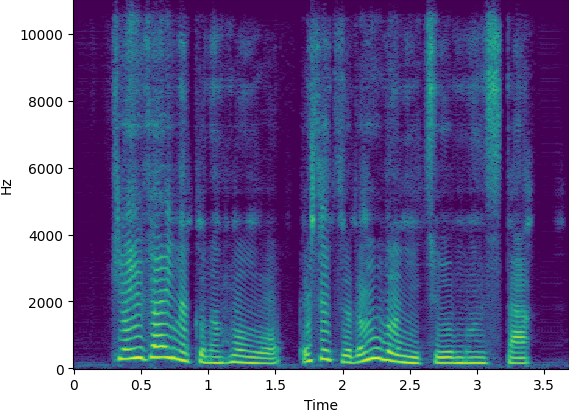

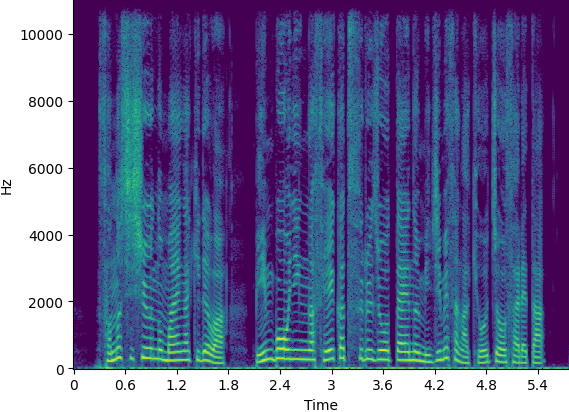

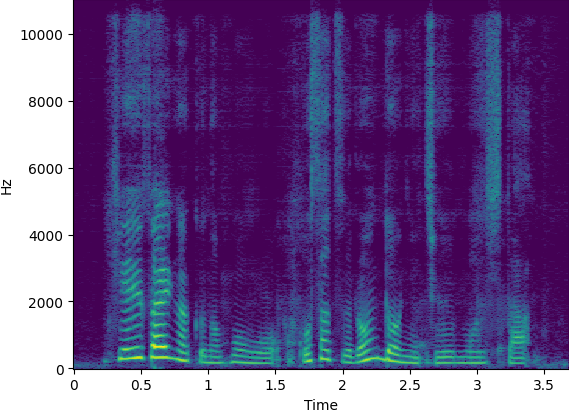

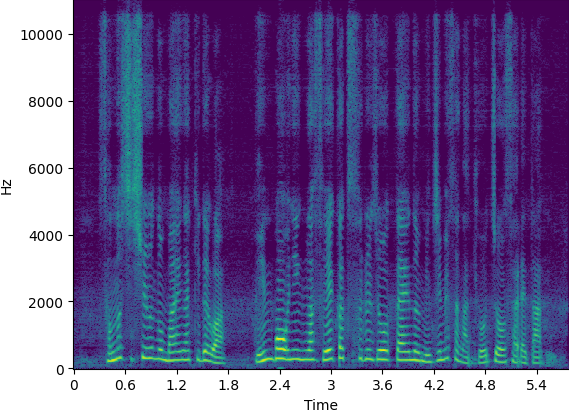

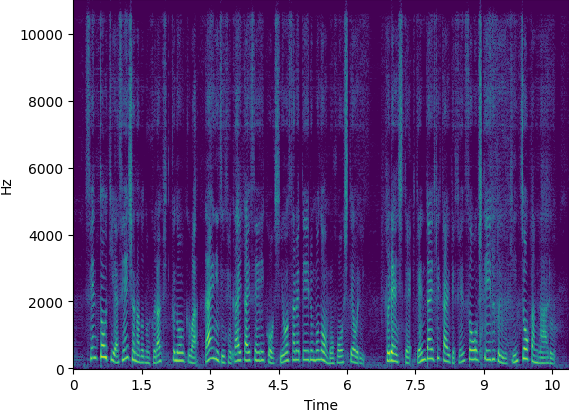

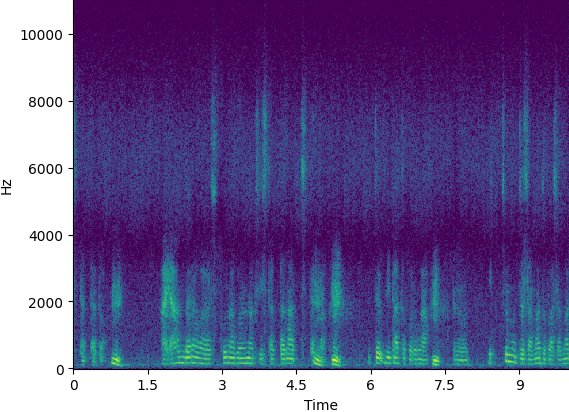

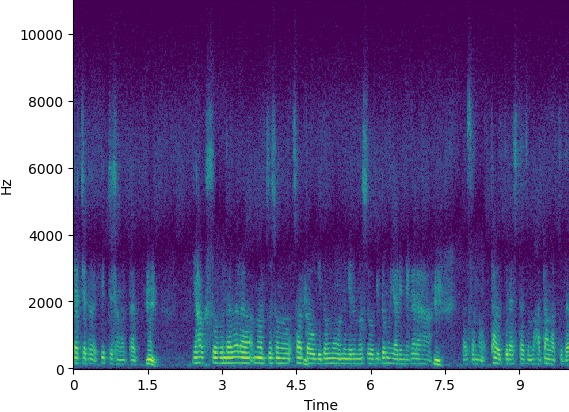

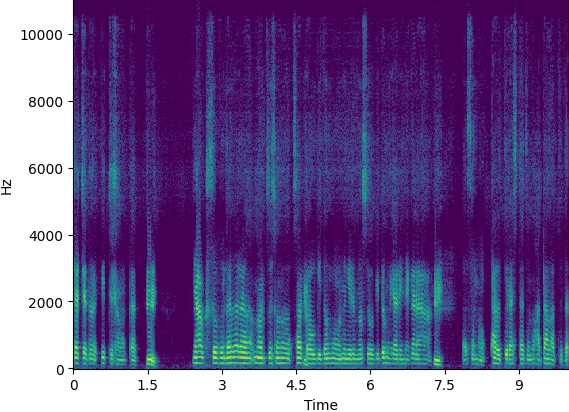

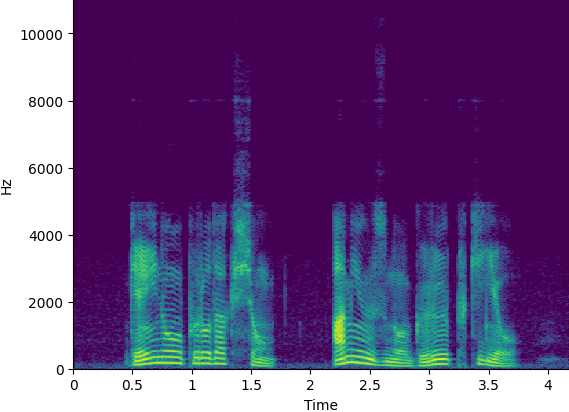

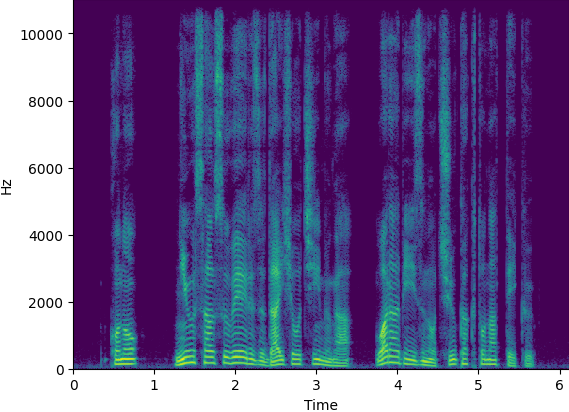

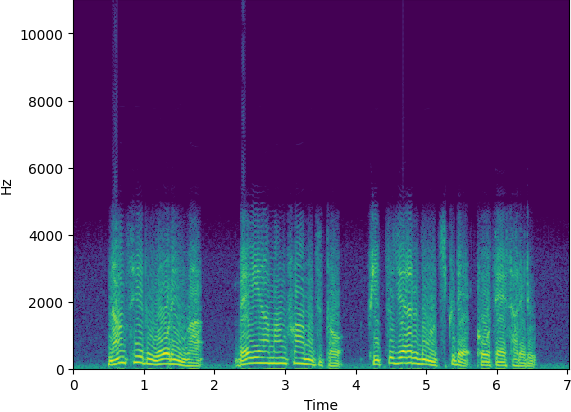

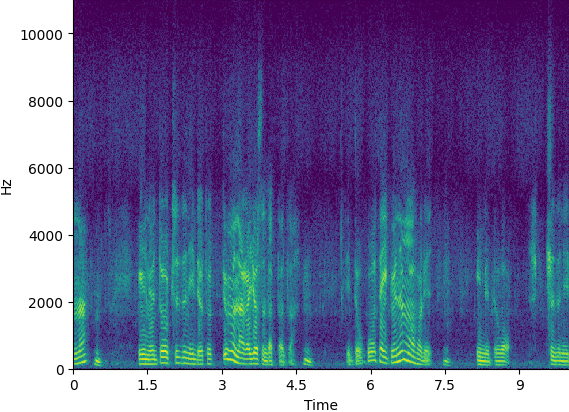

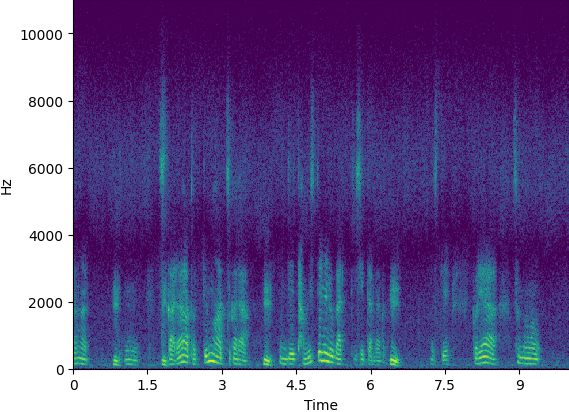

(1.d) Additive Noise

| Utterance | Ground truth | Original | Supervised [1] | Self-Mel | Self-SF | Self-Mel-RefEnc | Self-Mel-PLoss | Semi-Mel |
|---|---|---|---|---|---|---|---|---|
| Sample1 |  |  |  |  |  |  |  |  |
| Sample2 |  |  |  |  |  |  |  |  |
| Sample3 |  |  |  |  |  |  |  |  |
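An additive-noise condition like (1.d) is commonly simulated by mixing noise into clean speech at a chosen signal-to-noise ratio (SNR). The sketch below assumes white Gaussian noise and a 10 dB SNR purely for illustration; the noise type and level used for the demo samples are not stated on this page.

```python
import math
import random


def mean_power(samples):
    """Average squared amplitude of a signal."""
    return sum(s * s for s in samples) / len(samples)


def add_noise(samples, snr_db, seed=0):
    """Mix white Gaussian noise into `samples` at the requested SNR in dB."""
    rng = random.Random(seed)             # fixed seed for reproducibility
    noise = [rng.gauss(0.0, 1.0) for _ in samples]
    # Scale the noise so that signal_power / noise_power == 10 ** (snr_db / 10).
    target_noise_power = mean_power(samples) / (10 ** (snr_db / 10))
    scale = math.sqrt(target_noise_power / mean_power(noise))
    return [s + scale * n for s, n in zip(samples, noise)]


sr = 16000
clean = [math.sin(2 * math.pi * 440 * n / sr) for n in range(1600)]
noisy = add_noise(clean, snr_db=10.0)
```

Scaling by the measured power of the drawn noise, rather than its nominal variance, makes the resulting SNR match the request for this particular excerpt.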

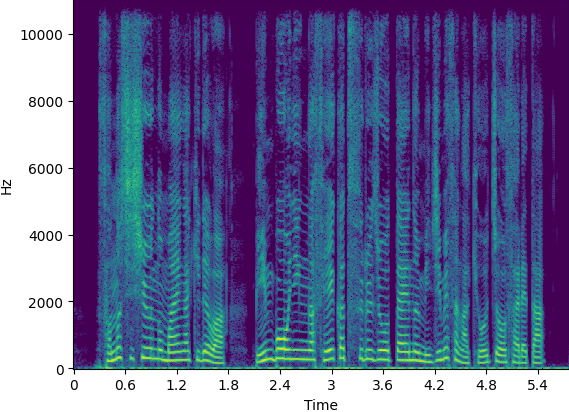

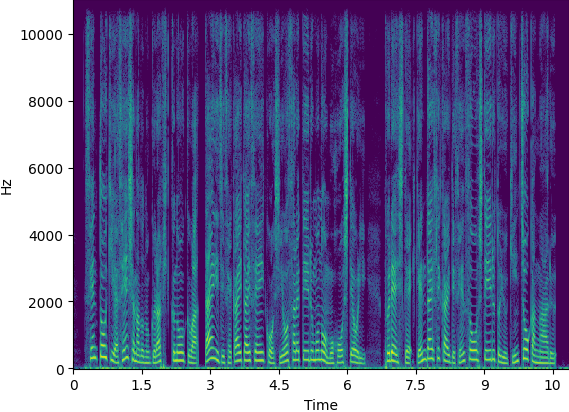

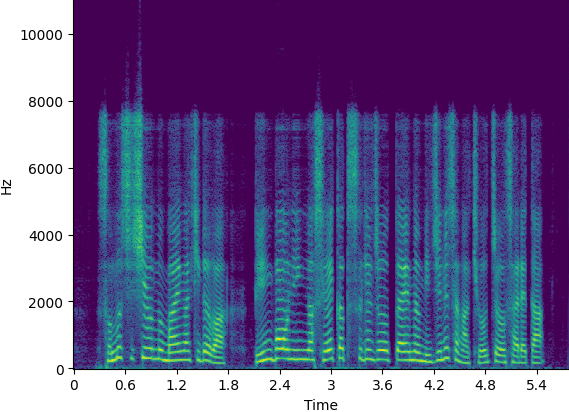

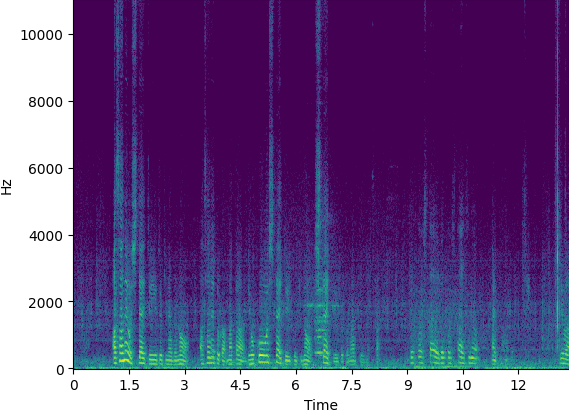

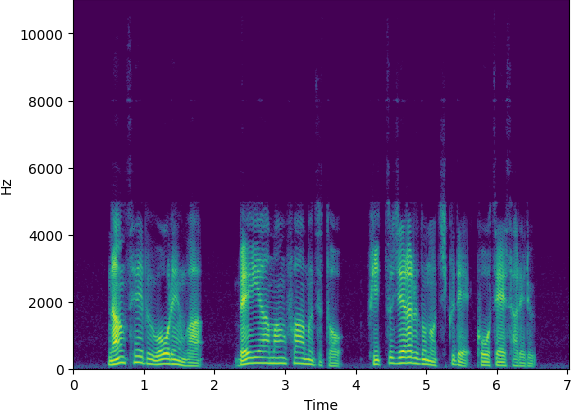

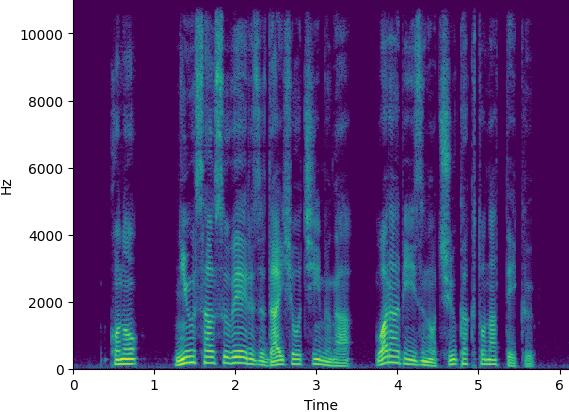

2. Speech restoration demo with single-domain real data

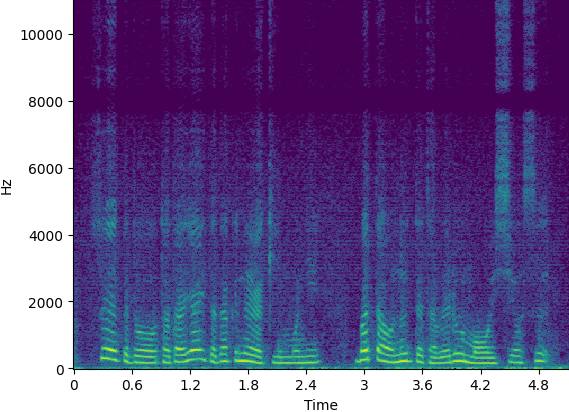

(2.a) Story telling of Japanese old tales

| Utterance | Original | Supervised [1] | Self-Mel | Self-Mel-Pretrain | Self-Mel-Pretrain-PLoss | Semi-Mel | Semi-Mel-Pretrain | Semi-Mel-Pretrain-PLoss |
|---|---|---|---|---|---|---|---|---|
| Sample1 |  |  |  |  |  |  |  |  |
| Sample2 |  |  |  |  |  |  |  |  |
| Sample3 |  |  |  |  |  |  |  |  |

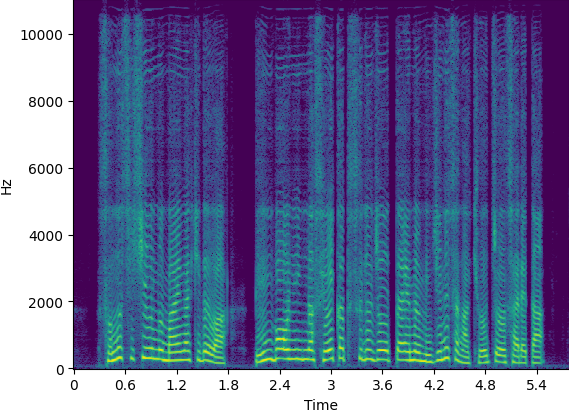

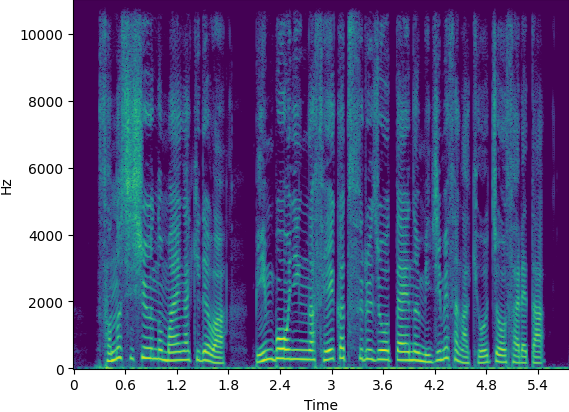

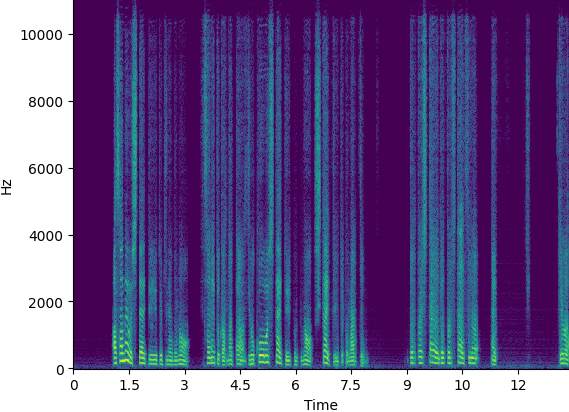

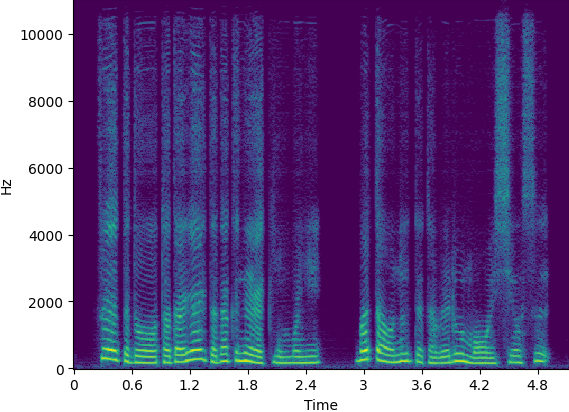

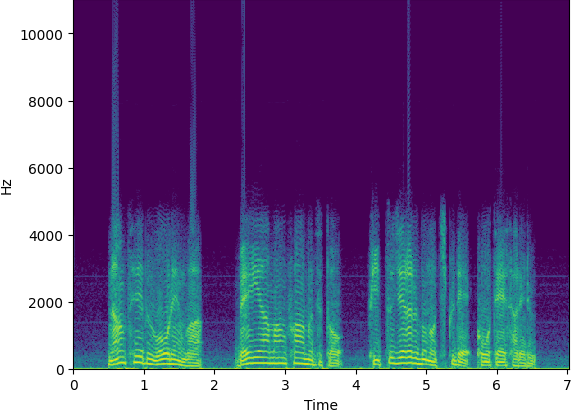

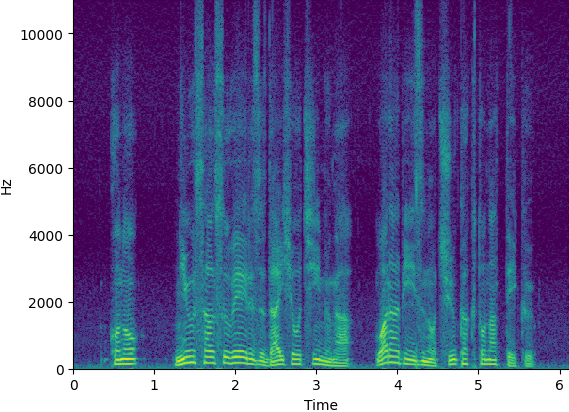

3. Speech restoration demo with multi-domain real data

(3.a) Domain1

| Utterance | Original | Supervised [1] | Self-Mel | Self-Mel-Pretrain | Semi-Mel | Semi-Mel-Pretrain | Semi-Mel-Pretrain-PLoss |
|---|---|---|---|---|---|---|---|
| Sample1 |  |  |  |  |  |  |  |

| Utterance | Original | Supervised [1] | Self-Mel | Self-Mel-Pretrain | Semi-Mel | Semi-Mel-Pretrain | Semi-Mel-Pretrain-PLoss |
|---|---|---|---|---|---|---|---|
| Sample1 |  |  |  |  |  |  |  |

| Utterance | Original | Supervised [1] | Self-Mel | Self-Mel-Pretrain | Semi-Mel | Semi-Mel-Pretrain | Semi-Mel-Pretrain-PLoss |
|---|---|---|---|---|---|---|---|
| Sample1 |  |  |  |  |  |  |  |

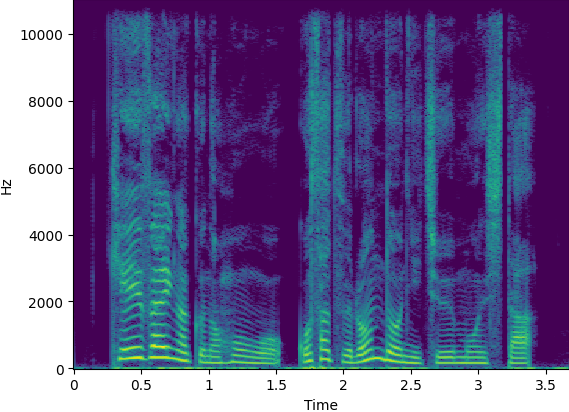

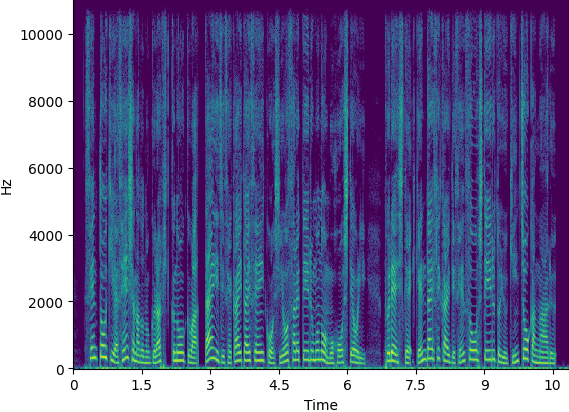

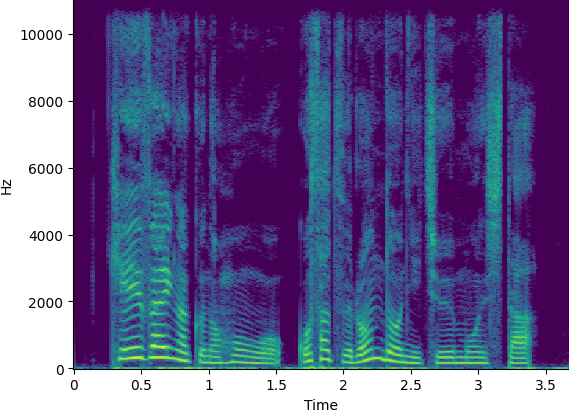

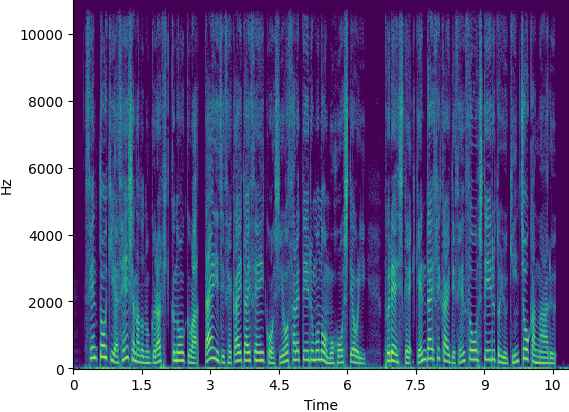

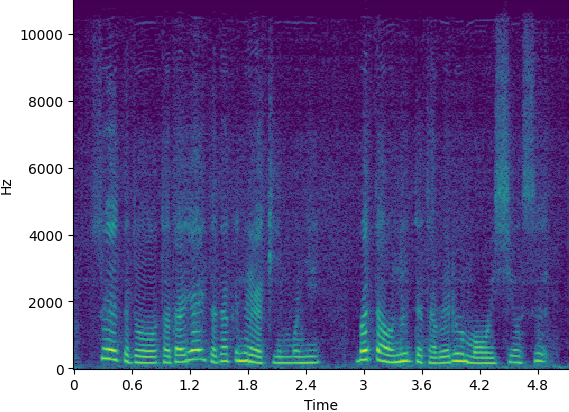

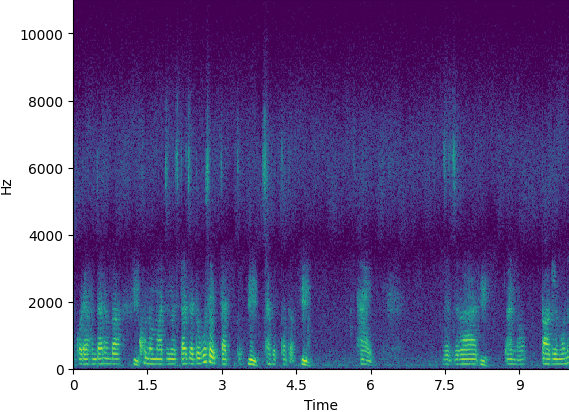

4. Audio effect transfer demo

(4.a) Simulated data (Mu-Law)

| Utterance | Source | Reference | High-quality | Mean spec. diff. | Proposed |
|---|---|---|---|---|---|
| Sample1 |  |  |  |  |  |
| Sample2 |  |  |  |  |  |
| Sample3 |  |  |  |  |  |

(4.b) Real data (Story telling of Japanese old tales)

| Utterance | Source | Reference | High-quality | Proposed |
|---|---|---|---|---|
| Sample1 |  |  |  |  |
| Sample2 |  |  |  |  |
| Sample3 |  |  |  |  |

References

- [1] H. Liu et al., "VoiceFixer: Toward general speech restoration with neural vocoder," arXiv, vol. abs/2109.13731, 2021.